10.1 Sensitivity and specificity

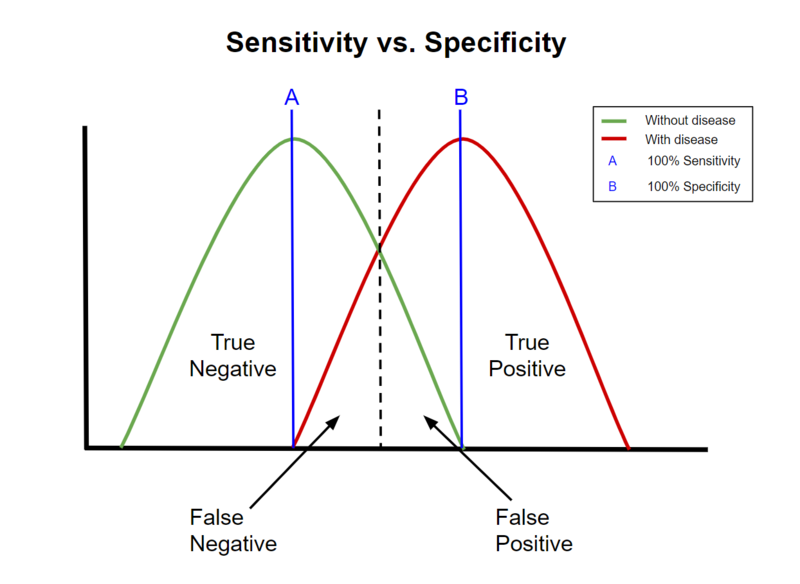

The sensitivity of a clinical test is its ability to correctly identify patients who have the disease. A test with 100% sensitivity identifies every patient with the disease. A test with 80% sensitivity detects 80% of patients with the disease (true positives), but the remaining 20% go undetected (false negatives).

The specificity of a clinical test is its ability to correctly identify patients who do not have the disease. A test with 100% specificity returns a negative result for every patient without the disease. A test with 80% specificity returns a negative result for 80% of patients without the disease (true negatives), but the remaining 20% are incorrectly identified and test positive (false positives).

No clinical test is perfect, and in general there is an inverse relationship between sensitivity and specificity. Quite often the values of the parameter being measured overlap between health and disease, which makes it difficult to decide where the reference limit for a test should be set. For example, in the figure below, setting the reference limit at point A gives the test good sensitivity but poor specificity, while setting it at point B gives better specificity but poor sensitivity.
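
The definitions and the trade-off described above can be sketched in a few lines of Python. This is only an illustration: the measurement values are invented, the names `Counts`, `classify`, `CUTOFF_A` and `CUTOFF_B` are hypothetical, and the sketch assumes that higher values of the measured parameter indicate disease, so that point A corresponds to the lower cut-off.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    tp: int  # true positives: disease present, test positive
    fn: int  # false negatives: disease present, test negative
    tn: int  # true negatives: disease absent, test negative
    fp: int  # false positives: disease absent, test positive


def sensitivity(c: Counts) -> float:
    """Fraction of patients with the disease who test positive."""
    return c.tp / (c.tp + c.fn)


def specificity(c: Counts) -> float:
    """Fraction of patients without the disease who test negative."""
    return c.tn / (c.tn + c.fp)


def classify(diseased, healthy, cutoff) -> Counts:
    """Count outcomes when a result at or above `cutoff` is called positive.

    Assumption: higher values of the parameter indicate disease.
    """
    tp = sum(v >= cutoff for v in diseased)
    fp = sum(v >= cutoff for v in healthy)
    return Counts(tp=tp, fn=len(diseased) - tp, tn=len(healthy) - fp, fp=fp)


# Invented results for illustration; the two groups overlap between 8 and 11.
healthy = [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
diseased = [8, 9, 10, 11, 12, 13, 14, 15, 16, 17]

CUTOFF_A = 8   # low cut-off: no disease missed, but more false positives
CUTOFF_B = 12  # high cut-off: no false positives, but more disease missed

if __name__ == "__main__":
    for name, cutoff in (("A", CUTOFF_A), ("B", CUTOFF_B)):
        c = classify(diseased, healthy, cutoff)
        print(f"Point {name}: sensitivity {sensitivity(c):.0%}, "
              f"specificity {specificity(c):.0%}")
```

With these invented values, the lower cut-off catches every diseased patient at the cost of misclassifying some healthy ones, and the higher cut-off does the reverse. Moving the cut-off through the overlap region can only trade one kind of error for the other.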
