51 Policy and program evaluation

Rick Cummings

Key terms/names

accountability, cultural safety, ethics, evaluation, evaluative thinking, evidence, formative/summative, improvement, Indigenous evaluation, merit, monitoring, policy, principles, program, stakeholder, standards, worth

Introduction

Evaluation is an essential element of high-quality public policy and program development and implementation.[1] It serves a range of purposes, including demonstrating the extent to which government interventions improve the wellbeing of individuals and society, holding the public sector accountable for its expenditure and activities, and identifying improvements in public policies and programs.

At the organisational level, evaluation is well captured in the concept of evaluative thinking, which involves focusing on what an organisation wants to learn, how it can best assemble evidence about this, what strategies it will use to learn from the evidence and how it will apply the lessons to improve organisational performance.[2] At the individual level, it is more appropriate to use the term ‘evaluative reasoning’, defined as ‘the process of synthesizing the answers to lower- and mid-level questions into defensible judgements that directly answer the high-level questions’.[3] At both levels, evaluative thinking or reasoning is more than just critical thinking, as it also requires creative, inferential and practical thinking,[4] as well as political thinking. More recently, awareness has grown of the need to think culturally about evaluation and of how doing so affects practice.

This chapter introduces the key concepts of evaluation as they apply to public policy and programs. It does so by identifying the conceptual basis of key elements and issues, and then exploring how these have developed over time and how they apply in practice. Evaluation has evolved rapidly since it was formalised in the 1960s, so there is a lot to cover. Given the breadth of the topic, not everything can be covered in depth, and references are provided so you can explore key concepts and issues further.

What is policy and program evaluation?

Policy is not well defined in the literature – its meaning is often context specific. One approach is to consider four different perspectives on policy: an authoritative choice by government; a hypothesis describing a cause-and-effect relationship; the objective of government action; and a public value statement.[5] For the purposes of this chapter, it is most helpful to treat a public policy as an explicit (written) decision by government to act broadly and over time in relation to society at large, usually through public agencies and officials, to deal with a real or perceived social issue and create a desired state of affairs. This definition gives a workable scope to what a policy is, ensuring it has the components needed for evaluation to be carried out.

A program is also defined in many ways but can be described as a group of related activities undertaken by or for government that is intended to have a specific impact or deliver a set of defined outcomes.[6] Policies usually comprise, and are delivered through, a set of related programs.

In its broadest sense:

[evaluation] refers to the process of determining the merit, worth, value, or significance of something … the evaluation process normally involves some identification of relevant standards of merit, worth, or value; some investigation of the performance of the evaluand [the entity being evaluated] on those standards; and some integration or synthesis of the results to achieve an overall evaluation or set of evaluations.[7]

Evaluation serves several purposes and, as a result, is defined in a number of ways. For example, if an evaluation is primarily intended to meet the needs of stakeholders, Patton’s definition may be more suitable: ‘Program evaluation is the systematic collection of information about the activities, characteristics, and outcomes of programs to make judgements about the program, improve program effectiveness and/or inform decisions about future programming’.[8]

From a government perspective, evaluation can be seen as:

the systematic and objective assessment of the design, implementation or results of a government program or activity for the purposes of continuous improvement, accountability, and decision-making. It provides a structured and disciplined analysis of the value of policies, programs, and activities at all stages of the policy cycle.[9]

In his text, Program evaluation: forms and approaches, Owen provides two definitions of evaluation and argues the first is best seen embedded in the second:

- Evaluation as the judgment of worth of a program.

- Evaluation as the production of knowledge based on systematic enquiry to assist decision-making about a program.[10]

There are clearly common themes in these definitions. First, evaluation is undertaken to make a judgement about the worth or merit of a program or policy. Evaluation is therefore charged with arriving at conclusions and making judgements. This extends its role beyond just providing information to decision makers.

Second, evaluation focuses on the intervention (the evaluand), be it a program or a policy. In this sense, it is different from policy research, which attempts to test a hypothesis about a social issue; evaluation focuses on the program or policy that has been implemented to tackle the issue.[11] Both policy research and policy evaluation are critical stages in the policy cycle and will be more closely examined later in this chapter.

Third, evaluation involves identifying the key questions to be addressed about the program or policy, selecting the best methods for collecting and analysing data to answer these questions, and determining the most appropriate ways of communicating the results of the analysis, and the judgements based on them, to relevant audiences. As will be seen, an evaluation study is best planned in consultation with the stakeholders of the program or policy, and conducted with their active participation.

How does evaluation fit into the policy cycle?

Evaluation is commonly included as one of the eight key elements in the policy cycle.[12] Although it is often placed at the end of the cycle, most evaluators take a broader view: evaluation, in different forms, can be valuable and can be tailored to almost any point in the lifecycle of a program or policy.[13] The main distinction is whether the evaluation focuses on formative questions about how the policy or program is being developed or implemented and how it can be improved, or on summative questions about whether it is achieving its intended outcomes, often to address issues of accountability.

A number of state and territory governments and Commonwealth government departments have developed guides or frameworks for conducting evaluation studies in their jurisdiction. They generally identify the following three categories of evaluation:

- formative/developmental – examines how a policy or program may be developed, or improved given changed circumstances. Studies of this type might research the need for the policy (including who needs it, the scale of the need and how best to meet it), review the research evidence about the social issue and ways to address it, or develop a business case.

- process – examines implementation by collecting data on inputs and activities, focuses on what is working and what is not, and ways to improve the policy or program. It may also examine the extent of stakeholder and target population engagement as well as achievement of short-term outcomes.

- outcome/impact – examines the policy or program when it has been in operation sufficiently long to produce the intended outcomes or impact, and thus to make judgements about its overall worth or merit.

These different categories of evaluation serve different purposes, but they all strive to apply evaluative thinking to the policy process. Evaluation is a utilitarian activity, undertaken to serve specific purposes. Four such purposes have been identified: program and organisational improvement; oversight and improvement; assessment of merit and worth; and knowledge development.[14] The first two primarily address formative questions related to the operation of the policy or program and aim to improve it; they would generally fit under the formative/developmental or process categories of studies. The third purpose is largely summative in nature and would apply in an outcome/impact study. Studies are rarely undertaken solely for knowledge development, but in reality nearly all evaluation studies contribute to it, even if this is not a stated purpose. In this way, evaluation educates different stakeholder groups using independently produced evidence, which often results in increased consensus about the program or policy.

Evaluation is closely linked to monitoring. These are seen as complementary processes in public policy. Monitoring is defined as:

the planned, continuous, and systematic collection and analysis of program information able to provide management and key stakeholders with an indication of the extent of progress in implementation, and in relation to program performance against stated objectives and expectations.[15]

In brief, monitoring is the largely routine collection of operational quantitative information by staff to provide management with performance information that highlights issues and identifies improvements. Unlike evaluation, it generally doesn’t make judgements about the relevance or success of the program or policy. Increasingly, monitoring and evaluation processes for a particular program or policy are developed together in a monitoring and evaluation framework to ensure data is collected and analysed consistently and reported in a timely fashion. More recently, the concept of learning has been added to create an approach termed monitoring, evaluation and learning (MEL). This approach views monitoring as a comprehensive process that illuminates what is and isn’t working, evaluation as a selective process involving deeper, more holistic analysis on which to base judgements of worth and merit, and learning as a continual process of using relevant, timely evidence to make decisions. MEL can be seen as another, more structured way of defining evaluative thinking.

Who conducts the evaluation and why?

Evaluation studies are normally conducted by a team of experts formally trained in evaluation. They may be internal to the agency, external consultants or, in some cases, a combination of the two. In a combined team, external consultants can provide additional resources and expertise not available internally, while internal staff can provide in-depth knowledge of the agency and easier access to internal information. Sometimes a mixed team is chosen to provide an opportunity for evaluation capacity building within the agency. Some agencies establish internal evaluation branches so that evaluation studies can be done internally, but by trained evaluators who are not answerable to the staff in charge of the policy or program. Generally, these branches report to the agency head to avoid the conflicts of interest that would arise if they evaluated policies or programs overseen by the office to which they report.[16] The choice of arrangement depends on a range of factors, but the key issues in deciding who conducts the evaluation include requisite expertise, perceived independence and objectivity, knowledge of the field, available funds and contractual requirements.[17] The Commonwealth Department of Finance provides a useful ‘decision tree’ for deciding who should conduct a particular evaluation study.[18]

For some time, there has been growing interest in having program participants and others with lived experience contribute to the design and conduct of evaluation studies. In Australia and New Zealand, there is also a cultural overlay, expressed as a desire to have evaluators familiar with Indigenous cultures where appropriate.[19] Increasingly, this leads to evaluators from Indigenous backgrounds being involved in studies of policies and programs that affect Indigenous peoples, which in reality includes most policies and programs (see ‘Evaluation challenges in government’ below).

The role of stakeholders in evaluation

As evaluation is a predominantly utilitarian activity, with enlightenment and decision making as key purposes, it is essential to involve stakeholders in the evaluation process, not just at the reporting stage but throughout the life of the evaluation study. To encourage this involvement, agencies often establish evaluation steering committees that work with the evaluation team to plan the study, help provide access to information, act as a sounding board for decisions about the evaluation and receive evaluation reports, both written and oral. These committees usually comprise the key stakeholders in the policy or program and report to the senior executive of the agency commissioning the study.

Theories and approaches

The discipline of evaluation has been through a sustained period of growth around the world over the past 50 years, during which it has developed a strong theoretical base and a wide range of approaches and methods. The evolution of evaluation is described below by exploring the history of evaluation theories and then discussing the different approaches to evaluation; the range of methods used in evaluation studies is discussed in a later section. Readers will find a range of views in the literature on what constitutes an evaluation theory, approach or method, and this chapter adopts one perspective to try to bring some clarity to this area. Theories, approaches and methods are linked, and these linkages are gradually developing into schools of evaluation.

Evaluation theory

As in many disciplines, there is no single theory of evaluation but rather a range of theories based on different assumptions and priorities. There have been three major stages in the development of evaluation theories:

- The first stage, during the 1960s, was focused on providing the conceptual basis for valuing and knowledge construction, and using rigorous scientific methods for doing so.

- The second, commencing in the 1970s, emphasised the need to ensure evaluation study findings were used, and focused on methods to promote utilisation.

- The third stage, from the 1980s, identified weaknesses in the prior theories and developed the idea of program theory: that is, understanding why a program or policy is expected to succeed.[20]

It is arguable that a fourth stage of evaluation theory is currently under development. It recognises the cultural basis of public policy, and therefore of evaluation, and argues for placing cultural perspectives at the centre of evaluation so that cultural knowledge and practice are incorporated in the design and conduct of evaluations. (This topic is addressed in more detail in ‘Evaluation challenges in government’ below.)

Alkin and Christie (2004) have very helpfully conceptualised and presented the theories of evaluation in an ‘evaluation theory tree’, which has two roots: accountability and systematic social inquiry. The first is the need to account for the resources and actions used in policies and programs (the rationale for evaluation), whereas the second focuses on the systematic use of justifiable methods for determining accountability (the methods of evaluation). Built on these roots is a tree with three main theory and practice branches of evaluation: ‘use’, ‘methods’ and ‘values’.[21] A fourth branch has been suggested, which focuses on ‘social justice’.[22] The smaller branches of this tree are the various specific theory and practice perspectives advocated by a range of evaluators.

Evaluation approaches

The different approaches to evaluation can be grouped into three main categories: positivist, constructivist and transformative.[23] Each of these is described below.

The positivist approaches, which generally fit into the methods branch of the ‘evaluation theory tree’, are based on obtaining an objective view of the causes and effects of a policy or program using rigorous methods. They stem from experimental approaches to research and use associated research methods, including randomised controlled trials and quasi-experimental designs, in attempting to identify causal pathways by controlling as many variables as possible. The focus of the evaluation study is on methods, particularly careful data collection and analysis, to eliminate or reduce bias so that findings are as valid, reliable and robust as possible. Positivist approaches have been promoted as the ‘gold standard’ of evaluation, but in practice their application is very limited because, for ethical, practical or political reasons, few policies or programs can be structured and implemented in such a controlled environment. In addition, studies using this perspective are often accused of ignoring the values of the policy or program, its specific context and the cultural perspectives of the participants. Finally, this approach raises the question of where the boundary lies between policy research and policy evaluation, with many evaluators classing experimental designs as policy research. A reaction to these criticisms has led to the development of realist evaluations, which are based on the view that there are regular patterns or mechanisms by which policies and programs operate to achieve changes within specific contexts. In contrast to experimental designs, realist studies do not attempt to generalise their findings widely.[24]

A second category of approaches is termed constructivist. These approaches share a common view that there is no objective truth about the effectiveness of policies and programs. Instead, the varying viewpoints of different stakeholders on the operation and effectiveness of a policy or program form the basis for assessing its merit or worth. Evaluators in this school of practice are most concerned with ensuring that stakeholders are directly involved in the planning and conduct of the evaluation study, so that the study proceeds as a partnership between evaluators and stakeholders. Although rigorous methods are still applied under this approach, there is much greater flexibility in the methods used, so that they take account of the needs of stakeholders over the course of the study. There is also much greater recognition that policies and programs can change over time, even within the timeframe of an evaluation study. Constructivist studies tend to focus on improvement rather than summative judgements. In terms of the ‘evaluation theory tree’, constructivist evaluations sit best on the ‘use’ branch, which focuses on who will use the findings of the evaluation and how they will use them. Stakeholders participate actively so that they are educated during the study and are regularly informed of its progress and findings (rather than only through a final report), which increases the likelihood that they will use the study findings.[25] Collaborative and participatory evaluation studies are advocated for, and widely used, with Indigenous communities and in international development settings.

Transformative evaluations focus on marginalised and vulnerable groups and aim to tackle the imbalance in power structures to further social justice and human rights. Adopting a transformative approach places the evaluator as an active agent in progressing social justice. Stakeholder values are a major focus of transformative studies, especially the values of marginalised groups. Furthermore, transformative studies involve direct participation by these groups in the various phases of an evaluation study, often including co-design of the study and ongoing interaction between these groups and the evaluators. The scope of the study is expanded to look at where existing systems and power structures are failing to foster social justice. This category ‘has implications for every aspect of the research methods, from the development of a focus to the design, sampling, data collection strategies, data analysis and interpretation, and use of the findings’.[26] Mixed methods (drawing on both quantitative and qualitative data) are nearly always required. They should be culturally appropriate, which includes using members of the community to collect data in the local language, understanding the role of the community, sharing data with the program participants, seeking guidance on how best to interpret the data and present findings to the local community, and respecting cultural perspectives on the use and ownership of data. Approaches in this category fit best on the values branch of the ‘evaluation theory tree’ and are increasingly used in evaluations with Australian Indigenous groups.

Methods

Policy research and evaluation share many of their methods but use them for different purposes. Generally, social or policy research is undertaken to investigate a social issue, such as unemployment, smoking or health care, to better understand its causes and to identify interventions that might help deal with it, such as clinical treatments, education or financial support. Policy research might be defined as ‘the process of conducting research on, or analysis of, a fundamental social problem in order to provide policymakers with pragmatic, action-oriented recommendations for alleviating the problem’.[27] One of the aims of policy research is to generalise the results to a wider population, and this research is often done using experimental designs.

Evaluation, on the other hand, focuses on the policy or program developed and implemented to tackle the social issue in a specific jurisdiction with a particular target population. It aims to provide evidence to stakeholders, especially decision makers, on how well the policy or program is being implemented and to what extent it is achieving its intended outcomes for its target population, as well as identifying any unintended outcomes. The findings of an evaluation are neither designed nor intended to be generalised to the wider population, although audiences beyond the program or policy stakeholders may be interested in the findings when considering similar social issues in their own jurisdictions.

Evaluation study designs

Although there are several possible designs for an evaluation study, it is generally not good practice to choose the design too early in planning. This is because numerous questions about the study, in particular its purpose, need to be answered first. (These questions are covered in ‘Evaluation process’ below.)

Evaluation designs can be categorised into three groups: exploratory, descriptive, and experimental.

- The exploratory category aims to identify the need for a policy, who needs it and where, as well as whether it is suitable for evaluation, and includes designs such as needs assessment, research synthesis, review of best practice, and logic development.

- The descriptive category aims to explore the processes and activities within an existing policy or program and comprises designs such as case study, empowerment, participatory and developmental evaluation, and most significant change.

- Finally, experimental studies focus on assessing whether the intended outcomes have been achieved and, if so, whether they are a result of the policy or program. They use counterfactual analysis, randomised controlled trials, qualitative impact assessment, cost–benefit or cost-effectiveness analysis, and social impact or social return on investment metrics to examine the outcomes and their causes.

An evaluation may use one or more designs, each dealing with a specific key evaluation question. The choice of the design should be based on the information required to answer the key evaluation question and should be negotiated with the stakeholders. Evaluators undertaking any of these designs need to be properly trained in the particular design.

Types and sources of data

The data needed in an evaluation study is determined by the key evaluation questions and the chosen design. A section of the evaluation plan should identify the data required to answer each question, where it can be collected and with what methods. This enables the study to be implemented efficiently and ensures data can be accessed and collected when necessary.

Data can be divided into quantitative and qualitative categories. Quantitative data is normally numerical and is open to a range of analysis processes and presentation approaches. This type of data is often most useful for answering questions about what happened in the policy or program, as in measuring outputs (how many workshops were conducted) or outcomes (which students improved their reading skills and by how much). Qualitative data, on the other hand, is often the basis for developmental and process evaluations and is usually in the form of text, although it may also be in video, audio or pictorial form. It is extremely valuable in capturing and presenting the views of stakeholders and the lived experience of participants. It generally addresses the ‘why’ questions in an evaluation plan: for example, why did the target group not attend the workshops, or why did some students improve in reading while others did not? Both categories of data have quality criteria to ensure data is collected appropriately and analysed rigorously. Nearly all evaluation studies make use of both quantitative and qualitative data to fully address the key evaluation questions.
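The requirement that an evaluation plan link each key evaluation question to the data, sources and collection methods needed to answer it can be pictured as a simple data matrix. The Python sketch below is illustrative only and assumes its own structure: the names `DataItem`, `KeyQuestion` and `review_plan` are hypothetical, not a template used by any jurisdiction. It flags questions with no identified data and plans that rely on only one category of data.

```python
from dataclasses import dataclass, field

# Hypothetical structure for illustration; not an official evaluation plan template.

@dataclass
class DataItem:
    description: str  # what is needed, e.g. "workshops delivered"
    kind: str         # "quantitative" or "qualitative"
    source: str       # where it comes from, e.g. "attendance records"
    method: str       # how it will be collected, e.g. "extract from program records"


@dataclass
class KeyQuestion:
    text: str
    data: list[DataItem] = field(default_factory=list)


def review_plan(questions: list[KeyQuestion]) -> list[str]:
    """Return warnings about gaps in a plan's question-to-data matrix."""
    warnings = []
    kinds_used = set()
    for q in questions:
        if not q.data:
            warnings.append(f"No data identified for: {q.text}")
        for item in q.data:
            if item.kind not in ("quantitative", "qualitative"):
                warnings.append(f"Unknown data type '{item.kind}' for: {q.text}")
            kinds_used.add(item.kind)
    # Most studies draw on both categories, so flag a plan that uses only one.
    for kind in sorted({"quantitative", "qualitative"} - kinds_used):
        warnings.append(f"Plan uses no {kind} data")
    return warnings


plan = [
    KeyQuestion(
        "How many workshops were conducted?",
        [DataItem("Workshops delivered", "quantitative",
                  "Attendance records", "Extract from program records")],
    ),
    KeyQuestion("Why did some of the target group not attend?"),
]

for warning in review_plan(plan):
    print(warning)
```

Run as written, this prints two warnings: the second question has no data identified, and the plan as a whole uses no qualitative data. Adding, for example, interview transcripts as a qualitative item for the second question clears both.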

Another method of categorising data is to look at its source. Three categories are generally recognised, depending on how original the data is and how close it is to its source: primary, secondary and tertiary. Primary data records events or evidence as they are first described, with little or no interpretation or commentary. Secondary data sources offer analysis of primary data in order to explain or describe it more fully, usually to summarise, synthesise, interpret or otherwise add value to the data. Tertiary sources are one step further removed from the original data and draw on bodies of secondary data to compile it across time, geography or theme. Table 1 provides some examples of different types of data from different sources.

Table 1 Examples of types and sources of data

| Data type | Primary (collected first-hand) | Secondary (previously collected) | Tertiary (summaries of existing data) |
|---|---|---|---|
| Quantitative | Surveys; attendance records; student marks | Crime statistics; hospital records | Meta-analysis; databases |
| Qualitative | Focus groups; interview transcripts; case studies | Biographies; journal articles | Literature review; systematic review |

Principles, standards and ethics

The first evaluation societies were formed in Canada in 1980 and the United States in 1986, and there are now more than 150 national and regional evaluation associations and societies worldwide.[28] In 1987, the Australian Evaluation Society (AES) was the third to be established. In New Zealand, the Aotearoa New Zealand Evaluation Association (ANZEA) was formed in 2006 and Mā Te Rae – Māori Evaluation Association in 2015. These professional bodies have provided a very useful forum for the development of training, research, accreditation and professional standards for the discipline.

Principles and standards

Of particular note internationally is the set of Joint committee program evaluation standards, which comprises 30 standards of practice in five dimensions of evaluation quality: utility, feasibility, propriety, accuracy and evaluation accountability.[29] The Standards were developed by a panel of leading evaluators and have been tested and revised over a number of years. They are widely used to guide evaluators and evaluation commissioners in identifying evaluation quality. In a complementary document, the American Evaluation Association has produced the Guiding principles for evaluators to guide evaluators in areas such as systematic inquiry, competence, integrity, respect for people, and common good and equity. These principles are very useful as a basis for formal education and professional development of evaluators. Within Australia, a similar resource, the Evaluators’ professional learning competency framework was developed by the AES in 2013. This is used as the basis for professional development conducted by the AES. ANZEA has developed a similar set of standards and competencies to be used in Aotearoa New Zealand.

Ethics within evaluation

Evaluation studies collect information from and about people that needs to be protected and treated with appropriate respect. This involves issues of privacy, confidentiality and anonymity as well as cultural safety. As such, evaluation studies should be required to meet the same ethical principles as pure and applied research. To address this in Australia, the AES developed a Code of ethics as well as Guidelines for the ethical conduct of evaluations. These are widely used by agencies commissioning evaluation studies to ensure evaluators practise ethically.

Given that evaluation studies of public policies and programs invariably involve interacting with people, often those in vulnerable situations, it is increasingly common for studies to be required to be formally submitted for assessment by human ethics committees. Whether this is required, and where the assessment takes place, vary according to a number of factors. Where a study is being conducted by university staff, assessment by the university’s human ethics committee is almost always required. If the study focuses on state-level programs in education or health, most states and territories have human ethics committees in these fields. If the policy or program involves Indigenous peoples, the Australian Institute of Aboriginal and Torres Strait Islander Studies (AIATSIS) in Canberra has a Research Ethics Committee that is available to any agency. AIATSIS also has a Code of ethics and a Research ethics framework to guide agencies and evaluators in conducting quality and culturally appropriate studies.

All of these resources and processes apply similar standards for practice, although they deal with slightly different issues. They form a very useful package of resources to guide both evaluation commissioners and evaluation practitioners to conduct high-quality ethical and culturally safe evaluation. They can also be used as checklists to help identify and rectify poor practice. Ethics review generally results in an improved study design and data collection and analysis because the evaluators are required to examine the impact of their methods more deeply. It is much better to have poor ethical practice identified and rectified before the study takes place. If poor practice is identified later, it is likely to strongly undermine the credibility of the study as well as tarnish the reputation of both the study commissioners and the evaluators.

Evaluation process

Although each evaluation is unique and is planned and conducted to suit the issues and questions about a specific policy or program at a specific time in its life cycle, there are some generally agreed processes for evaluation studies. The general process can be summarised in four steps: delineating, obtaining, communicating and applying.[30] It is worth noting that the four steps may not always operate in a strictly linear fashion, but rather as loops within an overall evaluation study. These steps are described in more detail below, and the box shows how they are applied in an actual study.

Delineating

The first step in an evaluation study, delineating, is to plan the study in consultation with key stakeholders. Planning is critically important, not only to ensure the best possible study design and implementation but also because it educates stakeholders about both the policy or program and the study. Stakeholders for any public policy or program will come from several agencies or groups and will bring with them differing perspectives, knowledge, experience and expectations. The planning of the study therefore needs to take account of these differences and seek to arrive at a common understanding, or consensus, about the policy or program, what it hopes to achieve and what the evaluation study should focus on. To do this, it is essential that key stakeholders of the policy or program are identified and directly involved in planning the study, including agreeing on what the policy or program is intending to achieve and how it intends to do this. One of the most useful tools for achieving this is a logic model (see ‘Logic models’ below). It is also essential to scope the study, starting with why it is being done and for whom, and then to develop a set of key evaluation questions and sub-questions. These high-level questions should be developed to meet the information needs of the stakeholders and to apply appropriate social justice and cultural perspectives, such as equity, human rights and cultural recognition. The data needed to answer these questions, how and from whom it will be collected, and the analysis and reporting processes should also be considered at this stage. The result of the delineation step is a formal evaluation plan agreed by the evaluator and the key stakeholders. The plan becomes the map by which the evaluation study is expected to be conducted and through which stakeholders and evaluators can hold each other accountable.
Templates for evaluation plans are available in a number of jurisdictions in Australia.[31]

Obtaining

The second step in an evaluation study is obtaining, analysing and synthesising the data needed to answer the key evaluation questions. It is generally accepted as good practice to start by identifying existing data, some of which is created during the administration of the policy or program and some through monitoring processes. This data is often highly relevant to the policy or program, is readily available and usually costs little or nothing to obtain. Where new data needs to be collected, evaluators can draw on the full range of research tools, including surveys, interviews, site visits, direct observation and focus groups. Evaluations should therefore be undertaken by teams trained in both qualitative and quantitative research methods. The data then needs to be analysed using accepted procedures, in direct reference to the key evaluation questions. This analysis provides the evidence presented to stakeholders when the results of the evaluation are reported.

Communicating

Traditionally, evaluation studies were reported in printed final reports. Research over the years has shown that relying on a final report alone is not effective in convincing stakeholders to use the evidence when making decisions about a policy or program. Instead, it is now widely accepted that engaging stakeholders throughout the evaluation study and communicating results to them regularly leads to greater use of the information.[32] This builds on the argument presented earlier about the benefits of stakeholder engagement. It also means the evaluation study can be designed so that evidence is presented and discussed at appropriate points during the study. In many cases, this leads to refinements of the evaluation plan so that new or supplementary questions can be considered as new issues come to light.

Applying

The final step in an evaluation study is for the stakeholders to make use of the evaluation study results. In doing this, there is often great benefit in involving the evaluators, as they now have a unique level of knowledge about the policy or program and are often well regarded by program staff and participants.[33] Evaluation results, as a source of information about a policy or program, compete with a range of other sources, including the media, personal experience, political pressure and lobby groups. As such, decisions do not always follow directly from evaluation studies, because the process by which evidence influences decision making is more protracted and implicit.[34] As mentioned above, engaging stakeholders in the study is a powerful way to increase the likelihood that evaluation results will influence their thinking.

Evaluation study of Western Australian sexual health and blood-borne virus applied research and evaluation network (SiREN) by John Scougall Consulting Services

Delineating

The structure, processes and findings of this study are available in two public documents (https://siren.org.au/about-us/independent-evaluation). Can you find evidence of where the object of this study, a research and evaluation network (SiREN) at Curtin University, is clearly identified, and the purposes and/or objectives of the study are listed? By examining the key evaluation questions provided in the evaluation plan in Appendix 4, can you conclude that this is a process study, an outcome study or both? Does the evaluation plan adequately set out each of the following: the focus of the study, the evaluation reference group, the study methodology, information collection processes and analysis, the reporting process and the study timeline? The plan, which was developed with the stakeholder reference group, also sets out the process for developing a ‘logic model’ for the program and explains its reasoning for adopting a realist evaluation approach (Appendix 9). Is there evidence in the reports that the logic model was used in the study, and that a realist approach was applied? If so, where?

Obtaining

As shown in the evaluation plan, this study used five data collection methods: desktop analysis of existing documents, development of a program logic, stakeholder survey, interviews and a case study. How well are these explained and how are they used to engage the stakeholders in the reference group over the course of the study?

Communicating

This study produced a number of reports over its five-month duration. How well are these linked to the evidence collected and analysed in the study and to the key evaluation questions? How are the reports used to keep the stakeholders informed and engaged throughout the study?

Applying

The regular reporting process enabled the reference group to engage in meaningful discussion with the evaluators and to make changes to the program where appropriate without waiting for the final report. In this study, the evaluator was not involved in future decisions about SiREN and it is unclear to what extent the evaluation study findings influenced the future of SiREN.[35] Can you identify evidence on the SiREN website that any of the recommendations of the study have been applied?

A key principle of evaluation is that the information produced by a study is expected to be used by the organisation and other stakeholders, but there is a common misconception about what constitutes use. Use is generally thought of as providing a basis for decisions. This is certainly one use, but the situation is more complicated, because stakeholders can use evaluation information in a wider range of ways. Understanding these wider uses is important for planning and implementing effective evaluation studies. The research literature identifies four types of use:

- process use – where stakeholders learn about the strategy and the processes of monitoring and evaluation by being involved in their development and implementation and develop a sense of engagement, ownership and self-determination

- conceptual use – stakeholders are educated about the logic of the strategy and develop a shared understanding of the strategy with other stakeholders

- instrumental use – stakeholders are informed about how the strategy is performing and the extent to which it is achieving its outcomes and contributing to higher level impacts, so they can make better-informed decisions

- strategic/symbolic use – stakeholders use the information from monitoring and evaluation to argue that a strategy is, or is not, working.

It is now common practice to plan evaluation studies to maximise all four types of use, because the greater the level of process and conceptual use, the greater the instrumental use tends to be. The best way to increase use is to engage stakeholders actively throughout the monitoring and evaluation processes and to provide findings regularly, in a manner that encourages discussion. In this way, the findings move beyond being passive data and more actively influence the stakeholders.

Of course, this does not guarantee that evaluation study findings will be used, or used appropriately. There are strong pressures from the political sphere and from parties with vested interests to avoid criticism and to select the findings that best suit their interests. It is important to recognise that evaluation operates in, and contributes to, the political context. At the same time, much can be done to promote wide use and inhibit misuse. Multiple parties can be engaged in and committed to the study. The study can be conducted transparently, with the analysis reported as well as the findings. Where possible, the reports can be made publicly available. These and other safeguards should be negotiated in the evaluation plan and, where appropriate, the contract.

Evaluation challenges in government

Logic models

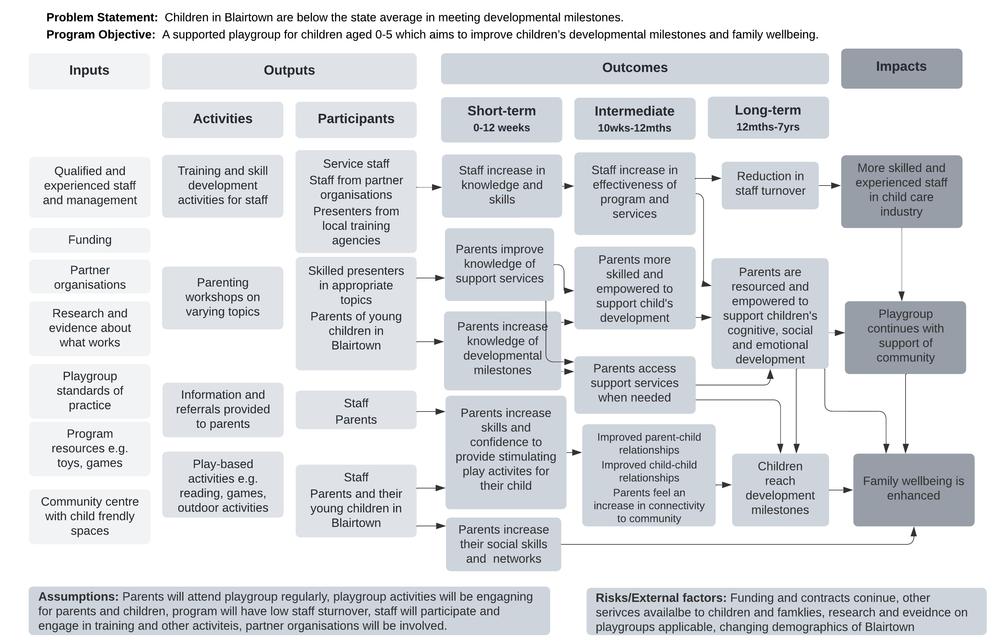

A critical component of evaluative thinking is to ensure that the policy or program is developed based on the best available research applied to the local context. This provides the best chance of success as well as a strong basis for evaluating success. High-quality policy research is invaluable for gaining the best understanding of the social issue being dealt with and of the range of social theories and past practice that explain how it might be addressed. This evidence is often viewed as having two components: a theory of change and a theory of action. The first is concerned with the processes by which individuals are expected to change as a result of the policy or program. It is often based on a social theory developed through social research but may instead draw on tacit understanding from previous experience. The perspective here is that most public policies and programs rest on an implicit or explicit theory of change. For example, increasing an individual’s knowledge of the dangers of smoking has been shown to change their attitude to smoking, leading them to stop or reduce smoking, or preventing them from starting. The combination of this research evidence and the views of key stakeholders can then be applied to develop the most appropriate policy or program. This is often referred to as the program or policy theory of action, which explains how the policy or program is structured and implemented to bring about the intended change in individuals or groups. Logic models have been developed as an approach to formalise this process.[36] A logic model is a diagrammatic description of what outcomes a policy or program intends to achieve and how it intends to do this. An example of a logic model is provided in Figure 1. It is now standard practice to produce a logic model with the participation of stakeholders when developing a new policy or program or when planning an evaluation study.
Logic models provide a number of benefits including increasing understanding and consensus among stakeholders, providing a guide for development and implementation, and providing a framework for assessing how well the policy or program is being implemented and to what extent it is achieving its intended outcomes.

As can be seen in Figure 1, a logic model commences with a statement of the social problem and the intended objective of the program or policy. The diagram lists the inputs necessary for the program and then outlines the causal pathways the program intends to support. These pathways move from outputs (what is done and with/to whom) to a hierarchy of intended outcomes (what changes take place in both staff and participants), leading to broader, longer-term impacts on the wider community. In this example the causal pathways form three ‘swimming lanes’, each leading to one or more impacts. In turn, the impacts form a hierarchy in which, working down, skilled and experienced staff are required to ensure the playgroup continues to operate, leading to ongoing family wellbeing in the community. The model also identifies several assumptions on which the program relies, as well as risks or external factors that might confront the program and reduce the likelihood of achieving its stated objective. As such, a logic model provides a coherent explanation of how change is expected to occur within a policy or program.[37] It creates a series of causal chains linking inputs, outputs and outcomes. Whether these causal chains hold in the particular policy or program can be tested through process and outcome evaluations. The logic model also identifies the key elements of the policy or program that can be monitored during its implementation.

Attribution versus contribution

In developing logic models, there can be outcomes (usually in the short or medium term) that are directly attributable to the outputs of the policy or program, and another set of outcomes (often longer-term outcomes or impacts) to which the policy or program contributes. Evaluation studies should identify which category each outcome fits, as this is critical for data collection and for the conclusions of the study. In the Blairtown Playgroup logic model in Figure 1, the increase in staff skills and the increase in parent skills and confidence are outcomes directly attributable to the program. By contrast, the program can only be expected to contribute to staff turnover (a long-term outcome) and family wellbeing (an impact). As can be seen, causality becomes more difficult to demonstrate as outcomes become longer term, because many other factors are likely to be involved. This is a particular issue when evaluating policies, as they often have long-term outcomes or impacts that are the focus of a number of programs, each of which contributes to, but is not solely responsible for, the outcome or impact.[38]
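To make the attribution/contribution distinction concrete, the following sketch encodes part of the Blairtown Playgroup logic model as a small Python data structure. It is a hypothetical illustration, not a tool described in this chapter. The outcome labels are paraphrased from the text, and the links between outcomes are assumptions based on the hierarchy described above. The inputs, outputs, assumptions and risks in the full model are omitted. Each outcome is tagged as attributable or contributory. The sketch also counts the length of the causal chain behind each outcome, reflecting the point that causality becomes harder to demonstrate the further an outcome sits from the program's outputs.

```python
from dataclasses import dataclass, field
from enum import Enum


class Link(Enum):
    """How an outcome relates to the program's outputs."""
    ATTRIBUTABLE = "attributable"  # outputs are expected to produce it directly
    CONTRIBUTORY = "contributory"  # program is one influence among many


@dataclass
class Outcome:
    name: str
    level: str  # e.g. "short-term outcome", "long-term outcome", "impact"
    link: Link
    depends_on: list[str] = field(default_factory=list)  # upstream outcomes


# Hypothetical, simplified encoding of part of the Blairtown Playgroup model.
# Labels are paraphrased from the text; the depends_on links are assumptions.
BLAIRTOWN = [
    Outcome("increased staff skills", "short-term outcome", Link.ATTRIBUTABLE),
    Outcome("increased parent skills and confidence", "short-term outcome",
            Link.ATTRIBUTABLE),
    Outcome("lower staff turnover", "long-term outcome", Link.CONTRIBUTORY,
            ["increased staff skills"]),
    Outcome("ongoing family wellbeing", "impact", Link.CONTRIBUTORY,
            ["lower staff turnover", "increased parent skills and confidence"]),
]


def chain_length(name: str, model: dict[str, Outcome]) -> int:
    """Count outcome steps after the outputs (1 = immediately after outputs)."""
    outcome = model[name]
    if not outcome.depends_on:
        return 1
    # The longest upstream path determines how far the outcome is from outputs.
    return 1 + max(chain_length(up, model) for up in outcome.depends_on)


def evidence_plan(outcomes: list[Outcome]) -> dict[str, str]:
    """Suggest the kind of causal claim an evaluation might test per outcome."""
    by_name = {o.name: o for o in outcomes}
    plan = {}
    for o in outcomes:
        claim = ("test attribution to program outputs"
                 if o.link is Link.ATTRIBUTABLE
                 else "assess contribution alongside other factors")
        plan[o.name] = f"{claim} (chain length {chain_length(o.name, by_name)})"
    return plan


if __name__ == "__main__":
    for name, approach in evidence_plan(BLAIRTOWN).items():
        print(f"{name}: {approach}")
```

Running the script prints one line per outcome. The two contributory outcomes sit at the end of the longest chains, which is where the text expects causal claims to be hardest to sustain. In a real study, the classification would come from the logic model agreed with stakeholders rather than being hard-coded.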

Indigenous perspective in evaluation

In 2020, the Productivity Commission developed the Indigenous evaluation strategy, which aims:

[to put] Aboriginal and Torres Strait Islander people at its centre, and emphasises the importance of drawing on the perspectives, priorities and knowledges of Aboriginal and Torres Strait Islander people when deciding what to evaluate and how to conduct an evaluation.[39]

This is in line with an international trend to formally recognise the critical role of Indigenous peoples in designing, implementing and evaluating public policy that affects them. This trend has progressed much further in Canada and particularly in Aotearoa New Zealand, where the practice is for evaluations of policies and programs involving Māori to be led by a Māori evaluator. The same argument is now being made in Australia, and evaluation practice is moving in this direction. An area of study that supports this is termed ‘decolonising methodologies’. It argues that researchers and evaluators have a responsibility to understand the impact of the colonial past on Indigenous people and to work to rebalance the power relationships between themselves and Indigenous peoples.[40]

As the Productivity Commission further points out, it is necessary to apply such a strategy as a ‘whole-of-government framework for Australian government agencies when they are evaluating both Indigenous-specific and mainstream policies and programs affecting Aboriginal and Torres Strait Islander people’.[41]

The AES has also developed and adopted a First Nations cultural safety framework to educate evaluators and evaluation commissioners on how to ensure their practice safeguards First Nations culture and knowledge. The framework also commits the AES to building the capacity of First Nations evaluators to undertake quality evaluation.

This is a complex area in which the development process itself should be led by First Nations people. In line with this, the Lowitja Institute has produced a number of publications on Indigenous evaluation to help evaluators better understand cultural safety and competence and avoid deficit-based assumptions. Recently, it has developed a set of four tools to support culturally safe evaluation studies.[42] These tools are:

- addressing cultural safety through evaluation

- tackling racism within evaluation

- community-led co-design of evaluation

- critical reflection on evaluation.

Conclusion

This chapter has focused on the theory and practice of evaluation in a policy context. The evolution of this field of inquiry has been outlined, and what emerges is a mature discipline of professional practice with its own range of theories and practices, as in other disciplines. That evaluation and social research share methods is clear. Less well understood is that they serve very different purposes and play different roles in the policy cycle. Quality evaluation of public policy is as important now as ever, particularly in an era of ‘fake news’. The potential of an evidence-informed approach to high-quality public policy development and implementation is considerable, provided an evaluative thinking culture in the public sector is supported, promoted and sustained.

As in any discipline, there are a number of important challenges facing evaluation, not least of which is the adoption of an Indigenous perspective within the Australian public sector. The time is ripe for this given the rising wave of recognition of Indigenous rights, respect and self-determination.

References

Alkin, M.C., and C.A. Christie (2004). An evaluation theory tree. In M.C. Alkin, ed. Evaluation Roots, 12–65. Thousand Oaks, CA: Sage. DOI: 10.4135/9781412984157

Althaus, C., P. Bridgman and G. Davis (2018). The Australian policy handbook. 6th edn. Sydney: Allen & Unwin. DOI: 10.4324/9781003117940

Australian Government Department of Finance (n.d.). Who evaluates? Evaluation in the Commonwealth. https://tiny.cc/vsizuz

Conley-Tyler, M. (2005). A fundamental choice: internal or external evaluation? Evaluation Journal of Australasia, 4(1–2): 3–11.

Davidson, J. (2014). Evaluative reasoning, Methodological briefs: impact evaluation 4, Florence, Italy: UNICEF Office of Research.

Funnell, S., and P. Rogers (2011). Purposeful program theory: effective use of theories of change and logic models. San Francisco: Jossey-Bass.

International Organisation for Cooperation in Evaluation (n.d.). VOPE directory. https://ioce.net/vope-directory

Katz, I., B.J. Newton, S. Bates and M. Raven (2016). Evaluation theories and approaches: relevance for Aboriginal contexts. Sydney: Social Policy Research Centre, UNSW Australia.

Loud, M.L., and J. Mayne, eds (2013). Enhancing evaluation use: insights from internal evaluation units. Los Angeles: Sage.

Lowitja Institute (2022). Tools to support culturally safe evaluation. https://www.lowitja.org.au/page/services/tools/evaluation-toolkit

Majchrzak, A. (1984). Methods for policy research. Thousand Oaks, CA: Sage.

Mark, M., G. Henry and G. Julnes (2000). Evaluation: an integrated framework for understanding, guiding, and improving public and nonprofit policies and programs. San Francisco: Jossey-Bass.

Markiewicz, A., and I. Patrick (2016). Developing monitoring and evaluation frameworks. Thousand Oaks, CA: Sage.

Mayne, J. (2008). Contribution analysis: an approach to exploring cause and effect. ILAC methodological brief. https://web.archive.org/web/20150226022328/http://www.cgiar-ilac.org/files/ILAC_Brief16_Contribution_Analysis_0.pdf

Mertens, D.M. (2012). Transformative mixed methods: addressing inequities. American Behavioral Scientist, 56(6): 802–13. DOI: 10.1177/0002764211433797

—— and A.T. Wilson (2012). Program evaluation theory and practice: a comprehensive guide. New York: Guilford Press.

Owen, J. (2006). Program evaluation: forms and approaches. 3rd edn. Sydney: Allen & Unwin.

Patton, M.Q. (2008). Utilization-focused evaluation. 4th edn. Thousand Oaks, CA: Sage.

—— (2018). A historical perspective on the evolution of evaluative thinking. In A.T. Vo and T. Archibald, eds. New directions for evaluation, 158: 11–28.

Pawson, R., and N. Tilley (1997). Realist evaluation. London: Sage.

Productivity Commission (2020). Indigenous evaluation strategy. https://www.pc.gov.au/inquiries/completed/indigenous-evaluation/report

Scriven, M. (1991). Evaluation thesaurus. Thousand Oaks, CA: Sage.

Smith, T.L.L.R. (1999). Decolonizing methodologies: research and Indigenous peoples. Dunedin, New Zealand: University of Otago Press.

Stufflebeam, D.L., and C.L.S. Coryn (2014). Evaluation theory, methods and applications. 2nd edn. San Francisco: Jossey-Bass.

Stufflebeam, D.L., and A.J. Shinkfield (2007). Evaluation theory, models, and applications. San Francisco: Jossey-Bass.

Vo, A.T., and T. Archibald, eds (2018). New directions for evaluative thinking. New Directions for Evaluation, 158: 139–147.

Weiss, C. (1998). Evaluation. 2nd edn. Upper Saddle River, NJ: Prentice Hall.

Western Australian Government Department of Treasury (2020). Program evaluation guide. https://www.wa.gov.au/government/document-collections/program-evaluation

Yarbrough, D.B., L.M. Shulha, R.K. Hopson and F.A. Caruthers (2011). The program evaluation standards: a guide for evaluators and evaluation users. 3rd edn. Thousand Oaks, CA: Sage.

About the author

Dr Rick Cummings is an Emeritus Professor at Murdoch University, where he lectured in policy research and evaluation in the Sir Walter Murdoch Graduate School. He has over 40 years’ experience in planning and conducting evaluation studies of policies and programs in the fields of health, education, training, and crime prevention. Prior to joining Murdoch in 1996, he worked in policy research and evaluation positions in the WA public sector in health, business, and vocational education and training. Rick is a past president of the Australian Evaluation Society and was made a Fellow in 2013.

1. Cummings, Rick (2024). Policy and program evaluation. In Nicholas Barry, Alan Fenna, Zareh Ghazarian, Yvonne Haigh and Diana Perche, eds. Australian politics and policy: 2024. Sydney: Sydney University Press. DOI: 10.30722/sup.9781743329542.

2. Vo and Archibald 2018.

3. Davidson 2014, 1.

4. Patton 2018.

5. Althaus, Bridgman and Davis 2018, 9.

6. Western Australian Government Department of Treasury 2020, 49.

7. Scriven 1991, 139.

8. Patton 2008, 39.

9. Australian Government Department of Finance n.d.

10. Owen 2006, 18.

11. Majchrzak 1984.

12. Althaus, Bridgman and Davis 2018.

13. Owen 2006.

14. Mark, Henry and Julnes 2000.

15. Markiewicz and Patrick 2016, 10.

16. Loud and Mayne 2013.

17. Conley-Tyler 2005.

18. Australian Government Department of Finance n.d.

19. Productivity Commission 2020.

20. Stufflebeam and Coryn 2014, 54–5.

21. Alkin and Christie 2004.

22. Mertens and Wilson 2012.

23. Katz et al. 2016.

24. Pawson and Tilley 1997.

25. Patton 2008.

26. Mertens 2012, 809.

27. Majchrzak 1984.

28. International Organisation for Cooperation in Evaluation n.d.

29. Yarbrough et al. 2011.

30. Stufflebeam and Shinkfield 2007.

31. Western Australian Government Department of Treasury 2020.

32. Patton 2008.

33. Patton 2008.

34. Weiss 1998.

35. Personal communication with J Scougall.

36. Funnell and Rogers 2011.

37. Funnell and Rogers 2011.

38. Mayne 2008.

39. Productivity Commission 2020.

40. Smith 1999.

41. Productivity Commission 2020.

42. Lowitja Institute 2022.