Artificial Intelligence

Half-Human, Half-AI, Full STEM Advantage: A Centaur Approach to Open Textbook Development

James Cook University

Ben Archer

Overview

This case study documents the implementation of a Centaur approach to developing Open Education Resources (OER) at James Cook University (JCU), where artificial intelligence (AI) assistance was integrated into academic textbook authoring whilst maintaining rigorous human oversight and quality control.

JCU’s transition from semester to trimester delivery created an urgent need for new learning materials supporting mandatory internship components in postgraduate science degrees. Traditional textbook development timelines were incompatible with the compressed implementation schedule. Existing career development resources inadequately addressed the specific challenges faced by international students navigating Australian workplace cultures and professional expectations.

The Centaur approach enabled completion of The STEM Advantage: Career Planning and Internship Strategies (Archer, 2025) within five months (October 2024 to February 2025). The systematic workflow incorporated AI-assisted content generation, colour-coded review processes, iterative revision cycles, and multi-phase quality assurance protocols. The textbook achieved over 700 views within six weeks of publication and was adopted across five subjects in five different degree programs. Enhanced accessibility features, including Google Translate integration for Mandarin and Hindi, addressed the international student demographic.

The Centaur approach delivered productivity improvements of approximately 40-60% while maintaining comprehensive human oversight. Success depended on substantial upfront investment in AI training, robust quality control frameworks, and recognition that AI enhances rather than replaces human expertise. The approach proved particularly effective for addressing urgent institutional needs while creating adaptable, widely adopted educational resources that serve diverse student populations.

Using this case study

This case study is aimed at academics looking to utilise AI to assist with the development of their own OER. After reading this case study, readers should be able to:

- Design and implement a systematic AI-assisted authoring workflow by adapting the colour-coded review system and multi-phase quality assurance protocols to their own disciplinary context, enabling them to accelerate OER development whilst maintaining academic rigour and institutional standards.

- Establish effective AI training protocols and session management strategies by creating dedicated AI projects with comprehensive instructions, managing platform usage limitations, and developing sustainable feedback mechanisms that maximise productivity gains while ensuring content quality and consistency.

- Develop institutional capacity for AI-assisted OER creation by identifying prerequisite competencies, securing appropriate time allocations and technical resources, and building quality control frameworks that address the specific risks and opportunities associated with AI-generated academic content in their educational context.

Background

The development of The STEM Advantage: Career Planning and Internship Strategies for Science, Technology, Engineering and Mathematics Postgraduate Students emerged from a critical need to support international postgraduate science students at JCU undertaking internships within their degrees. This textbook project, initiated in 2024, addressed significant gaps in career development support for a predominantly international student cohort pursuing Master’s degrees within the College of Science and Engineering.

The student demographic presented unique challenges: the majority were international students with limited or no Australian workplace experience within their respective industries, and most were English as Second or Other Language (ESOL) speakers. These factors created substantial barriers to successful internship engagement and career development, particularly given research indicating that students often struggle with career capability development and self-efficacy in professional contexts (Fish et al., 2025).

Recent scholarship highlights the complexity of internship motivation and outcomes. Popov (2025) demonstrates that while internships serve as occupational tasters enabling students to crystallise vocational goals, they can also delay workforce entry when students lack clear career direction. This phenomenon was particularly relevant for JCU’s international postgraduate cohort, who faced additional challenges navigating Australian workplace cultures and professional expectations.

Furthermore, Fish et al. (2025) identify that student disengagement with employability development represents a persistent challenge across higher education, with career services often accessed too late in students’ academic journey. At JCU, organisational restructures had resulted in significant waitlists for career service appointments, meaning that postgraduates were engaging with career support services at the point of near-graduation when intervention opportunities were severely limited.

The textbook creation coincided with the comprehensive redevelopment of learning materials within LearnJCU, the university’s learning management system (LMS). This synchronicity provided an opportunity to integrate AI-assisted authoring processes whilst developing culturally responsive, academically rigorous OER that could address the identified gaps in internship preparation and career development support for this specific student population.

Project description

The context

JCU was transitioning from semester to trimester delivery, which created both opportunity and urgency for updating learning materials across postgraduate science programs. This institutional change provided dedicated time for comprehensive content redevelopment.

Choosing an AI approach

To speed up this intensive content creation process, I decided to integrate AI assistance into the writing workflow. I selected Claude as the primary AI tool on the recommendation of colleagues who had successfully used similar approaches, notably Inger Mewburn and Narelle Lemon in writing their book Rich Academic, Poor Academic (Mewburn, 2024).

The “Centaur” method

The methodology adopted what Ethan Mollick (2024) calls a “centaur approach”—using AI to enhance existing human capabilities rather than replace them. This collaborative framework positioned AI as a writing enhancement tool rather than an autonomous content generator. This ensured that human expertise influenced each sentence in the book, meeting academic integrity and quality guidelines.

Tool-to-principle reference

To ensure this approach works with evolving technologies, Table 1 maps the specific tools used to their underlying principles, enabling readers to adapt the workflow to their available platforms:

| Current Tool | Core Principle | Alternative Examples |
| --- | --- | --- |
| Claude | Human-in-the-loop content generation | ChatGPT, Gemini, future AI platforms |
| Grammarly | Multi-phase automated quality assurance | ProWritingAid, institutional editing tools |
| LearnJCU | LMS-aligned content structure | Moodle, Canvas, Blackboard formats |

How the workflow worked

Step 1: Setting up the AI workspace

I developed a dedicated AI project workspace with detailed instructions that mirrored my institutional LMS requirements.

Step 2: Content input and generation

The workflow followed a systematic pattern:

- Research materials were fed into the AI platform

- Basic story frameworks were drafted to illustrate key concepts

- Clear learning outcomes were defined for each chapter

- The AI system generated initial content drafts using human-in-the-loop methodology

- Drafts were transferred to standard word processing software for human revision

The colour-coded quality control system

Why I needed visual tracking

A critical feature was implementing a colour-coded review system in word processing software to ensure human oversight of AI content. This approach was based on Dell’Acqua et al.’s (2023) research showing that workers using AI without verification achieved only 60-70% accuracy, while those who critically reviewed AI outputs gained up to 43% productivity while maintaining accuracy.

How the colour system worked

The system used three highlight colours, with unhighlighted text marking finished material:

- Yellow highlights: AI-generated content needing review and potential rewriting

- Red highlights: Human-authored sections requiring substantial revision

- Green highlights: Content needing grammar checking using automated tools

- No highlighting: Completed, publication-ready material

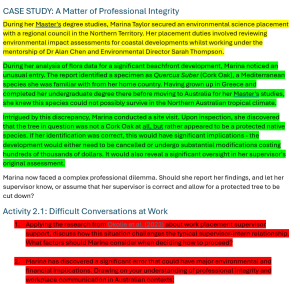

Figure 1 shows this visual tracking system in practice, demonstrating how AI content was systematically processed through different review stages.

Key takeaway: The colour-coded approach ensured comprehensive quality control while clearly separating AI-generated from human-authored content throughout the revision process.
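As a rough illustration of how the four review states described above could be tracked outside a word processor, the sketch below models each passage's highlight in Python. The `Highlight` enum, both helper functions, and the passage labels are hypothetical names introduced for this example; the project itself applied the highlights in word processing software.

```python
from enum import Enum


class Highlight(Enum):
    """Review states from the colour-coded system (names are illustrative)."""
    YELLOW = "AI-generated content needing review and potential rewriting"
    RED = "human-authored section requiring substantial revision"
    GREEN = "content needing grammar checking with automated tools"
    NONE = "completed, publication-ready material"


def outstanding(passages):
    """Count passages per highlight colour that still need work."""
    counts = {h: 0 for h in Highlight if h is not Highlight.NONE}
    for _label, highlight in passages:
        if highlight is not Highlight.NONE:
            counts[highlight] += 1
    return counts


def is_publication_ready(passages):
    """A chapter is ready only when no passage carries a highlight."""
    return all(h is Highlight.NONE for _label, h in passages)


# Hypothetical chapter: (passage label, current highlight)
chapter = [
    ("Placement background", Highlight.YELLOW),
    ("Species discovery", Highlight.GREEN),
    ("Professional implications", Highlight.RED),
    ("Learning outcomes", Highlight.NONE),
]

summary = outstanding(chapter)
for highlight, count in summary.items():
    print(f"{highlight.name}: {count} passage(s) - {highlight.value}")
print("Ready to publish:", is_publication_ready(chapter))
```

A tally like this only reports what remains highlighted. It does not replace the human judgement applied at each review stage.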

Managing platform limits

Even with premium AI platform subscriptions, usage limitations created workflow constraints. Most conversational AI platforms restrict interaction volume within specific timeframes, creating boundaries around productivity.

The quality vs. quantity challenge

This limitation was particularly problematic when using Mollick’s approach of “bringing AI to the table” (2024, p. 47) through detailed feedback. The process involved:

- Communicating preferences about AI outputs

- Identifying what worked well and what needed improvement

- Providing enhanced guidance for subsequent iterations

Planning the sessions

This feedback approach significantly improved content quality. AI systems can adapt to specific writing preferences, institutional requirements, and teaching approaches. However, detailed feedback used substantial platform capacity, creating a trade-off between quality improvement and content volume.

The workflow required careful session management and strategic planning to balance quality benefits with quantity requirements. Platform limitations meant spreading the writing process across multiple sessions and days, disrupting creative flow and requiring time to re-establish context.

Why human error still happened

Despite rigorous review processes and two comprehensive proofreading rounds, human error remained an ongoing problem. AI platform disclaimers about potential errors were sometimes overlooked, and mistakes weren’t identified until the institutional publishing team conducted their review.

The reality of human-AI collaboration

These oversights show a critical limitation: while human-AI collaboration enhances productivity and quality compared to unverified AI use, it remains fallible and dependent on human vigilance.

This aligns with Dell’Acqua et al.’s (2023) findings, where no participant achieved 100% accuracy, regardless of verification processes. Errors persisted despite systematic review protocols, showing that human-AI collaboration is valuable but not perfect.

Multiple safety nets required

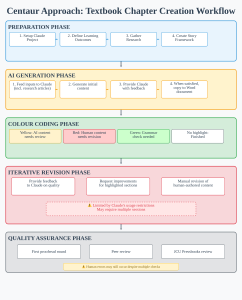

Figure 2 illustrates the systematic approach, showing how multiple verification stages create overlapping safety nets throughout the development process. The workflow incorporated multiple verification stages—from initial visual tracking through peer review and institutional proofreading—yet human errors still occurred until the final publishing review.

Bottom line: Multiple quality checks remain essential for acceptable accuracy, even with well-structured human-AI collaboration.

Copyright and intellectual property considerations

Using AI to create educational content raises important questions about who owns the work and how it fits with open education principles. This project addressed these considerations in several ways:

Who owns AI-generated content?

Under Claude’s current terms of service, users keep rights to their inputs and any outputs generated, as long as the content doesn’t violate usage policies. However, institutions should check their specific AI platform agreements since terms vary across providers and may change over time.

Maintaining human authorship

This project kept clear records of the collaborative process, with substantial human input in planning, reviewing, and revising all content. The Centaur approach ensures significant human intellectual contribution throughout, supporting traditional academic authorship principles.

Open content licensing

All materials developed through this AI-assisted process were published under a Creative Commons Attribution-NonCommercial 4.0 International licence, aligning with institutional commitments to OER. The collaborative methodology supports rather than undermines open content principles by making content creation tools accessible to more educators.

Institutional policy alignment

JCU treated AI-generated content similarly to other research and writing tools, with the human author maintaining responsibility for accuracy, appropriateness, and compliance with academic integrity standards.

Key point: Clear documentation of the human-AI collaborative process supports traditional authorship principles while embracing new technology capabilities.

Key outcomes

The implementation of the human-AI collaborative approach to textbook development yielded several significant achievements that demonstrate both the efficiency and effectiveness of AI-assisted academic authoring for OER.

Accelerated development timeline

The textbook was successfully completed within a five-month development period from October 2024 to February 2025. This compressed timeline was made possible through the systematic workflow illustrated in Figure 2, where AI assistance accelerated the initial content generation phase while maintaining quality assurance processes. The timing perfectly aligned with academic calendar requirements, enabling immediate deployment at the commencement of Trimester 2, 2025.

Timeline achievement: Five months from blank page to publication-ready content, compared to my previous 13-month experience with traditional OER development.

Strong initial engagement

Within the first six weeks of publication, the textbook achieved over 700 views, indicating strong initial engagement from the target audience of postgraduate science, technology, engineering, and mathematics (STEM) students. This early adoption rate suggests that the collaborative approach successfully produced content that resonated with student needs and academic expectations.

Institutional adoption

In the months following publication, two other institutions adopted sections of the book as course materials in similar subjects, demonstrating the broader appeal and quality of the AI-assisted content.

Student feedback

Initial student feedback demonstrates strong engagement with the AI-assisted textbook. Student evaluation surveys showed marked improvement in ratings for online learning materials quality, increasing from an average of 3.76/5.00 in 2023-2024 to 5.00/5.00 in early 2025. However, this represents a limited sample as the textbook was published in May 2025, meaning comprehensive cohort feedback remains unavailable for this case study.

Cross-program integration and sustainability

The textbook’s adoption across five subjects within five different degree programs demonstrates how AI-assisted OER development can create sustainable, reusable educational resources that serve diverse learning contexts—a core principle of open educational practice (OEP). This broad implementation validates the effectiveness of the collaborative development process in creating adaptable, high-quality educational resources.

Enhanced accessibility and inclusive design

Language support

To address the predominantly international student demographic, a Google Translate widget was integrated into the platform, enabling automatic translation into Mandarin and Hindi. This accessibility feature acknowledges the linguistic diversity of the target audience while expanding the resource’s potential reach and utility for ESOL learners.

Culturally responsive content

The textbook examples and case studies were deliberately drawn from real student experiences during professional placements, with particular attention to cross-cultural workplace navigation and challenges. This approach ensures that content reflects the lived experiences of the diverse international cohort, providing culturally relevant scenarios that resonate with students from various cultural backgrounds.

Multiple learning approaches

The multi-modal design—combining structured text, visual workflow diagrams, practical examples, and step-by-step processes—accommodates different learning preferences and cognitive processing styles. The clear heading structure and navigation support students who rely on assistive technologies or prefer systematic information processing.

Socioeconomic accessibility

The open licensing and free access model removes financial barriers that might prevent students from accessing quality learning materials, supporting equity principles central to open education. This approach particularly benefits international students who may face additional financial pressures.

Areas for future development

This case study acknowledges that while linguistic and cultural diversity were addressed through translation features and culturally relevant examples, broader inclusivity frameworks could be strengthened in future iterations. This includes incorporating input from accessibility experts and diverse student voices from the design phase, rather than retrofitting inclusive features.

Open access benefits

The AI-assisted development approach supports OEP principles by:

- Democratising content creation: Making sophisticated authoring tools accessible to individual educators

- Accelerating resource sharing: Faster development enables broader distribution

- Enhancing sustainability: Reduced development costs support long-term resource availability

Bottom line: The collaborative approach delivered a high-quality textbook in significantly less time while maintaining academic standards and achieving strong student engagement across multiple programs.

Learnings and recommendations

Recommendation 1: Choose your platform wisely

Principle/Context: Strategic AI platform selection and institutional preparation are crucial for successful OER development projects.

Application/Example: Evaluate AI tools based on conversation limits, academic writing capabilities, and institutional compatibility before committing resources. This project used Claude based on colleague recommendations, avoiding costly trial-and-error phases. JCU’s semester-to-trimester transition provided dedicated development time essential for thorough implementation without compromising existing teaching responsibilities. This approach supports OEP equity principles by enabling individual educators and smaller institutions to develop high-quality OER materials without requiring large content development teams or substantial financial resources.

Recommendation 2: Designing quality control workflows

Principle/Context: Robust quality control workflows are essential to maintain academic integrity when using AI-assisted authoring.

Application/Example: This project implemented a colour-coded review system in Word documents:

- Yellow = AI content requiring review

- Red = human sections needing revision

- Green = content needing grammar check

- Unhighlighted = finalised content

This visual system allowed multiple reviewers to track progress and safeguard against overlooked errors, while recognising that human oversight remains fallible, as demonstrated by Dell’Acqua et al.’s (2023) research showing no participants achieved perfect accuracy despite comprehensive verification processes.

Beyond accuracy, quality control processes should address potential algorithmic bias in AI-generated content, ensuring diverse perspectives and inclusive representation throughout educational materials.

Recommendation 3: Managing platform constraints

Principle/Context: AI platform limitations require strategic session management to balance output quality with production volume.

Application/Example: Even premium subscriptions impose conversation limits that can disrupt workflows. This project addressed Claude’s usage constraints by:

- Distributing content generation across multiple sessions

- Maintaining conversation logs for context continuity

- Creating template instructions for quick redeployment

- Scheduling writing sessions to align with 24-hour platform reset cycles

The balance between meaningful AI dialogue and usage capacity requires realistic timeline projections and additional time budgeting for multi-session workflows.
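To make the session-planning trade-off concrete, here is a minimal Python sketch that packs writing tasks, in order, into sessions under a per-session budget. The task names, the exchange counts, and the budget of 25 are assumptions for illustration only. The case study does not report actual usage limits, and platforms do not necessarily express limits as a fixed number of exchanges.

```python
def plan_sessions(tasks, exchanges_per_session):
    """Greedily pack tasks, in order, into sessions under an exchange budget.

    tasks: list of (name, estimated_exchanges) pairs (estimates are hypothetical).
    """
    sessions, current, used = [], [], 0
    for name, needed in tasks:
        if needed > exchanges_per_session:
            raise ValueError(f"{name!r} needs more exchanges than one session allows")
        if used + needed > exchanges_per_session:
            # Budget exhausted: close this session and wait for the next reset.
            sessions.append(current)
            current, used = [], 0
        current.append(name)
        used += needed
    if current:
        sessions.append(current)
    return sessions


# Hypothetical estimates: detailed feedback rounds consume budget as well as drafting.
tasks = [
    ("Chapter 1 draft", 12),
    ("Chapter 1 feedback", 10),
    ("Chapter 2 draft", 14),
    ("Chapter 2 feedback", 9),
]

plan = plan_sessions(tasks, exchanges_per_session=25)
for number, names in enumerate(plan, start=1):
    print(f"Session {number}: {', '.join(names)}")
```

Under these assumed numbers the four tasks need two sessions. Adding more feedback rounds per chapter would push the same content across more sessions, which is the quality-versus-volume trade-off described above.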

Recommendation 4: Foundation building through AI training

Principle/Context: Effective AI collaboration requires substantial upfront investment in system training and configuration before content generation begins.

Application/Example: This project allocated 20-30% of the timeline to AI training, including:

- Configuring Claude Projects with institutional content standards

- Providing sample academic writing and citation formats

- Establishing tone expectations for postgraduate audiences

- Creating multiple examples until output consistently aligned with requirements

This foundational investment reduced revision cycles and improved initial output quality, embodying Mollick’s (2024) emphasis on productive human-AI partnerships through explicit guidance.

Recommendation 5: Prerequisite competencies

Principle/Context: AI assistance amplifies existing human capabilities rather than compensating for absent skills or expertise.

Application/Example: Successful implementation requires educators to possess:

- Proficiency in academic writing conventions

- Deep disciplinary knowledge

- Experience in curriculum design and pedagogical approaches

Without these foundational competencies, educators cannot effectively evaluate AI outputs for accuracy, appropriateness, or alignment with learning objectives, rendering quality control processes ineffective regardless of workflow sophistication.

Recommendation 6: Establishing realistic expectations

Principle/Context: AI-assisted development accelerates timelines while still requiring comprehensive human oversight and traditional quality assurance protocols.

Application/Example: Expect significant but not revolutionary productivity gains. This project achieved a five-month development timeline compared to my previous OER experience of 13 months, suggesting potential improvements of 40-60% under similar conditions. However, variables such as scope, subject matter, and institutional support affect direct comparisons.
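For readers who want to check the comparison, the short Python calculation below works from the two durations stated in the text. The variable names are illustrative, and the calculation covers elapsed calendar time only. It says nothing about hours worked or differences in scope between the two projects.

```python
previous_months = 13  # earlier, traditionally developed OER (from the text)
centaur_months = 5    # this project (from the text)

# Share of elapsed time saved relative to the earlier project.
time_saved = (previous_months - centaur_months) / previous_months
print(f"Reduction in elapsed time: {time_saved:.0%}")  # prints "Reduction in elapsed time: 62%"
```

The raw reduction of about 62% sits at the top of the 40-60% range quoted above, which is consistent with the caution that scope, subject matter, and institutional support make direct comparison imprecise.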

Regardless of timeline acceleration, comprehensive quality assurance remains essential:

- Extensive human input throughout all development phases

- Multiple revision cycles for every AI-generated section

- Peer review and institutional proofreading processes

- Traditional verification protocols to address emerging risks of AI-generated errors

Successful implementation also requires transparency about AI assistance, clear attribution practices, and acknowledgment that human oversight remains essential for identifying bias or inappropriate content.

In practice

1. Institutional readiness and resources

How does your current institutional context support or constrain AI-assisted OER development? Consider available time allocations, technical infrastructure, quality assurance protocols, and administrative support for innovative pedagogical approaches. What specific resources or policy changes would you need to implement a Centaur approach effectively?

2. Personal competency assessment

Evaluate your existing capabilities in relation to the prerequisite skills identified in this chapter. Do you possess sufficient subject matter expertise, academic writing proficiency, and pedagogical knowledge to effectively guide and evaluate AI-generated content? Where might you need additional skill development before attempting AI-assisted authoring?

3. Quality control framework design

How would you adapt the colour-coded review system and multi-phase quality assurance approach to fit your specific disciplinary requirements and institutional standards? What additional verification steps might be necessary for your subject area, and who would constitute your peer review network for content validation?

4. Student-centred implementation

Considering your specific student demographic and learning context, how would you modify the workflow to address their particular needs? What accessibility features, cultural considerations, or pedagogical approaches would you incorporate to ensure the AI-assisted content serves your students effectively while maintaining academic rigour?

Further reading and resources

Archer, B. (2025). The STEM Advantage: Career Planning and Internship Strategies for Science, Technology, Engineering and Mathematics Postgraduate Students (1st ed.). JCU Open eBooks. https://doi.org/10.25120/awhr-v2e7

References

Archer, B. (2025). The STEM Advantage: Career Planning and Internship Strategies for Science, Technology, Engineering and Mathematics Postgraduate Students (1st ed.). JCU Open eBooks. https://doi.org/10.25120/awhr-v2e7

Dell’Acqua, F., McFowland, E., III, Mollick, E., Lifshitz-Assaf, H., Kellogg, K. C., Rajendran, S., Krayer, L., Candelon, F., & Lakhani, K. R. (2023). Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality (Working Paper No. 24-013). Harvard University. https://www.hbs.edu/faculty/Pages/item.aspx?num=64700

Fish, N., Bertone, S., & van Gramberg, B. (2025). Improving student engagement in employability development: Recognising and reducing affective and behavioural barriers. Studies in Higher Education. https://doi.org/10.1080/03075079.2025.2461271

Mewburn, I. (2024, May 30). We wrote a 36,000 word book in a single weekend (yes, really). The Thesis Whisperer. https://thesiswhisperer.com/2024/05/01/we-wrote-a-36000-word-book-in-a-single-weekend-yes-really/

Mollick, E. (2024). Co-Intelligence: Living and Working with AI (1st ed.). WH Allen.

Popov, J. (2025). Motivation in internship: Working to learn and learning to work. Journal of Vocational Education & Training. Advance online publication. https://doi.org/10.1080/13636820.2025.2462957

Image descriptions

Figure 1: Colour-Coded Quality Control System

Document titled “A Matter of Professional Integrity” describing Marina Taylor’s ethical dilemma during her Master’s degree in environmental science placement. The document contains three highlighted sections: yellow highlighting indicates AI-generated content needing rewriting (covering Marina’s background and placement details), green highlighting shows content ready for grammatical adjustment (describing her discovery of a misidentified tree species), and red highlighting marks human-generated content requiring rewriting (covering the professional implications and an activity about difficult workplace conversations). The case study asks whether Marina should report her findings about a protected native species being incorrectly identified as Cork Oak, which could halt a major coastal development project.


Figure 2: Centaur Approach: Textbook Chapter Creation Workflow

Flowchart titled “Centaur Approach: Textbook Chapter Creation Workflow” showing a five-phase process for creating educational content using AI assistance. The workflow progresses through colour-coded phases: blue Preparation Phase (setup, learning outcomes, research, story framework), orange AI Generation Phase (feeding inputs to Claude, generating content, providing feedback, copying to Word), green Colour Coding Phase (highlighting content by type – yellow for AI content needing review, red for human content needing revision, green for grammar checks, no highlight for finished content), pink Iterative Revision Phase (feedback cycles with Claude, with a warning about usage restrictions), and grey Quality Assurance Phase (proofreading, peer review, JCU Pressbooks review, with a note that human errors may still occur despite multiple checks).


Acknowledgement of peer reviewers

The author gratefully acknowledges the following people who kindly lent their time and expertise to provide peer review of this chapter:

- Dr Ala Barhoum, Visiting Fellow, Australian National University, Centre for Learning and Teaching

- Rhiannon Hall, Education & Students Content & Communications Coordinator, University of Technology Sydney

How to cite and attribute this chapter

How to cite this chapter (referencing)

Archer, B. (2025). Half-human, half-AI, full STEM advantage: A Centaur approach to open textbook development. In Open Education Down UndOER: Australasian Case Studies. Council of Australian University Librarians. https://oercollective.caul.edu.au/openedaustralasia/chapter/half-human-half-ai/

How to attribute this chapter (reusing or adapting)

If you plan on reproducing (copying) this chapter without changes, please use the following attribution statement:

Half-human, half-AI, full STEM advantage: A Centaur approach to open textbook development by Ben Archer is licensed under a Creative Commons Attribution 4.0 International licence.

If you plan on adapting this chapter, please use the following attribution statement:

*Title of your adaptation* is adapted from Half-human, half-AI, full STEM advantage: A Centaur approach to open textbook development by Ben Archer, used under a Creative Commons Attribution 4.0 International licence.