13 Two Sample Tests for Means

Learning Outcomes

At the end of this chapter you should be able to:

- identify the difference between a paired samples and independent samples test;

- conduct a hypothesis test for paired samples;

- conduct a hypothesis test for two independent samples;

- compute the pooled variance for two independent samples;

- verify assumptions for the models used in the hypothesis tests;

- compute confidence intervals for a single mean and a difference of means.

Contents

13.1 Paired samples t-test

13.2 Independent samples t-test

13.3 Summary

13.1 Paired Samples t-test

Consider an experiment in which there is one treatment and one control group. Differences between the profile of subjects in each group can cause problems.

Example 13.1 A small clinical trial

Four patients with a rare disease are recruited to a clinical trial.

The patients' characteristics are as follows.

| Patient | Age | Sex |
|---|---|---|
| A | 71 | M |
| B | 68 | M |
| C | 28 | F |
| D | 34 | F |

Two patients are to be assigned to the treatment group, and two to the control group. If we did this completely at random, we could get patients A and B in the treatment group, and C and D in the control group. This could cause problems.

- Suppose that the disease tends to be worse in men than women. Then the outcomes for the treatment and control group may be very similar even though the treatment is beneficial, since the benefits are counterbalanced by the increased acuteness of the disease in men.

- The same argument could also be made in terms of age rather than sex.

- Suppose that the treatment group do have better outcomes than the control group. It will be impossible to say whether this is due to the treatment, or due to the fact that the disease tends to be worse in women (or in the young).

We can use a Matched Pairs design to adjust for these differences. The idea is to select experimental units in pairs that are 'matched' on potentially important characteristics, such as subject age and sex in a clinical trial. We then randomly assign one member of each pair to the treatment group, and the other member of the pair to the control group. The result is a treatment group and a control group that are very similar in terms of important characteristics of the experimental units (i.e. the variability between subjects is controlled).

In the clinical trial example, patients A and B form a matched pair, as do patients C and D. We should randomly assign A and B to different groups, and C and D to different groups.
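The within-pair randomisation described above can be sketched in R. This is only an illustration: the data frame layout, the column names `pair` and `group`, and the seed are assumptions made for the sketch, not part of the original trial.

```r
# Patients from Example 13.1, with A and B forming pair 1 and C and D pair 2.
patients <- data.frame(
  patient = c("A", "B", "C", "D"),
  pair    = c(1, 1, 2, 2)
)

set.seed(1)  # arbitrary seed, used only so the sketch is reproducible

# Within each matched pair, shuffle the two labels so that exactly one
# member goes to the treatment group and the other to the control group.
patients$group <- NA_character_
for (p in unique(patients$pair)) {
  rows <- which(patients$pair == p)
  patients$group[rows] <- sample(c("treatment", "control"))
}

print(patients)
```

Whatever the seed, each pair contributes one patient to each group, so the two groups are balanced on age and sex.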

It is often possible to 'match' an individual to him/herself, and look at results under two different experimental conditions (e.g. before and after some treatment).

Example 13.2

Twenty students studying European languages took a two week intensive course in spoken German. To assess the effectiveness of the course, each student took a spoken German test before the course, and an equivalent test after the course. The before and after test scores on each student are paired observations.

The model

We have two populations with means $\mu_1$ and $\mu_2$. A sample from each population is selected, where the samples have a natural pairing. Often each pair of observations is on the same unit, as in “before” and “after” experiments.

The hypotheses of interest are:

\[H_0: \mu_1 = \mu_2\]

against one of the alternatives,

\[H_1: \mu_1 \neq \mu_2, \qquad H_1: \mu_1 > \mu_2, \qquad H_1: \mu_1 < \mu_2,\]

depending upon the question of interest.

Let $X_{11}, \dots, X_{1n}$ and $X_{21}, \dots, X_{2n}$ be the samples from the two populations with means $\mu_1$ and $\mu_2$ respectively, with pairs $(X_{1i}, X_{2i})$, $i = 1, \dots, n$. Let $D_i$ be their difference, $D_i = X_{1i} - X_{2i}$, and let $\overline D$ be the mean and $S_D$ be the standard deviation of these differences. Then the standardised sample mean is

\[T = \frac{\overline D - \mu_D}{S_D/\sqrt{n}} \sim t_{n-1},\]

where $\mu_D = \mu_1 - \mu_2$ and

- the distribution is exact if the $D_i$ are normally distributed;

- the distribution is approximate if the $D_i$ are not normally distributed AND the sample size is large ($n \ge 30$).

The hypothesis test is now based on the differences. The hypothesis of interest in terms of $\mu_1$ and $\mu_2$ is expressed in terms of $\mu_D = \mu_1 - \mu_2$, as below.

\[H_0: \mu_D = 0\]

against one of the alternative hypotheses:

\[H_1: \mu_D \neq 0\]

\[H_1: \mu_D > 0\]

\[H_1: \mu_D < 0\]

Note that the order of subtraction is important as it determines the alternative hypothesis.

Differencing has reduced the data set to just one sample, so the test now proceeds exactly as the one-sample t-test.

Example 13.3

Trace metals in drinking water affect the flavor, and an unusually high concentration can pose a health hazard. Measurements of zinc concentrations (in parts per billion (ppb)) were taken at ten locations, in both bottom water and surface water. Do the data suggest that the true average concentration in the bottom water exceeds that of surface water?

| Location | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Zinc concentration in bottom water | 0.430 | 0.266 | 0.567 | 0.531 | 0.707 | 0.716 | 0.651 | 0.589 | 0.469 | 0.723 |
| Zinc concentration in surface water | 0.415 | 0.238 | 0.390 | 0.410 | 0.605 | 0.609 | 0.632 | 0.523 | 0.411 | 0.612 |
| Difference (bottom minus surface) | 0.015 | 0.028 | 0.177 | 0.121 | 0.102 | 0.107 | 0.019 | 0.066 | 0.058 | 0.111 |

The sample mean and standard deviation of the ten differences are

\[\overline d = 0.0804, \qquad s_d = 0.05227.\]

Solution

The hypotheses of interest are

\[H_0: \mu_D = 0 \quad \text{versus} \quad H_1: \mu_D > 0,\]

where $\mu_D$ is the mean difference in the zinc concentrations between the bottom water and surface water.

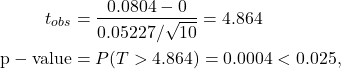

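The test statistic is

\[T = \frac{\overline D - \mu_D}{S_D/\sqrt{n}} \sim t_9, \qquad t_{obs} = \frac{0.0804 - 0}{0.05227/\sqrt{10}} = 4.864.\]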

Since the sample size is small we need to assume that the differences are normally distributed. The p-value $P(T \ge 4.864)$ is computed in R as

1-pt(4.864,9)

[1] 0.0004454422

so there is very strong evidence against the null hypothesis. We conclude that the mean zinc concentration in the bottom water is greater than that in the surface water.

A 95% confidence interval for the mean difference in zinc concentrations is (using the $t_9$ critical value 2.262)

\[0.0804 \pm 2.262 \times \frac{0.05227}{\sqrt{10}} = (0.043, 0.118).\]

Note that this interval does not contain 0, consistent with our hypothesis test that the mean difference in concentrations is positive.
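The calculations in Example 13.3 can be reproduced in R. The sketch below enters the zinc data from the table and uses the built-in `t.test` function; the variable names are chosen here for illustration only.

```r
# Zinc concentrations at the ten locations in Example 13.3.
bottom  <- c(0.430, 0.266, 0.567, 0.531, 0.707, 0.716, 0.651, 0.589, 0.469, 0.723)
surface <- c(0.415, 0.238, 0.390, 0.410, 0.605, 0.609, 0.632, 0.523, 0.411, 0.612)

# One-sided paired test of H0: mu_D = 0 against H1: mu_D > 0,
# where the differences are taken as bottom minus surface.
paired_test <- t.test(bottom, surface, paired = TRUE, alternative = "greater")
print(paired_test)

# The same test written as a one-sample t-test on the differences.
d <- bottom - surface
one_sample <- t.test(d, mu = 0, alternative = "greater")

# Two-sided 95% confidence interval for the mean difference.
ci <- t.test(d, conf.level = 0.95)$conf.int
print(ci)
```

Both calls give the same test statistic, which illustrates that the paired test is simply a one-sample t-test on the differences.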

Example 13.4

A bank wants to know if omitting the annual credit card fee for customers would increase the amount charged on its credit cards. The bank makes this no-fee offer to a random sample of 250 of its customers who use its credit cards. It then compares how much these customers charged this year with the amount they charged last year. The mean increase is $350 and the standard deviation of the change is $1200.

(a) Is there significant evidence at the 1% level that the mean amount charged has increased under the no-fee offer? State the appropriate hypotheses and carry out a hypothesis test, clearly stating your conclusion. State any assumptions required for the analysis to be valid.

(b) What are the consequences for the bank of a Type I error here?

(c) Compute a 95% confidence interval for the mean increase in the amount charged.

Solution

Let the random variable $D$ denote the increase in amount charged. Then we assume $D \sim {\rm N}(\mu_D, \sigma_D^2)$, where $\mu_D$ is the mean increase. From the data,

\[n = 250, \qquad \overline d = 350, \qquad s_d = 1200.\]

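(a) The hypotheses of interest are

\[H_0: \mu_D = 0 \quad \text{versus} \quad H_1: \mu_D > 0.\]

The test statistic is

\[T = \frac{\overline D - \mu_D}{S_D/\sqrt{n}} \sim t_{249}.\]

The observed value of the test statistic is

\[t_{obs} = \frac{350 - 0}{1200/\sqrt{250}} = 4.612.\]

The p-value is

\[P(T \ge 4.612) < 0.0001,\]

so there is overwhelming evidence against the null hypothesis. Since the p-value is well below 0.01, we conclude that omitting the credit card fee has increased the mean amount charged.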

(b) A Type I Error would occur if the null hypothesis is rejected when it is true. In this case the bank would incorrectly conclude that the mean amount charged has increased under the no-fee initiative when it has not. The bank would then give up the fee income without gaining any benefit in return.

(c) A 95% CI is

\[350 \pm 1.9695 \times \frac{1200}{\sqrt{250}} = (200.5, 499.5),\]

where 1.9695 is the 2.5% critical value of the $t_{249}$ distribution.
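When only summary statistics are available, as in Example 13.4, the test statistic, p-value and confidence interval can be computed directly with `pt` and `qt`. The following is a minimal sketch; the variable names are chosen here for illustration.

```r
# Summary statistics from Example 13.4.
n     <- 250    # number of customers sampled
d_bar <- 350    # mean increase in amount charged (dollars)
s_d   <- 1200   # standard deviation of the change (dollars)

se    <- s_d / sqrt(n)                 # standard error of the mean difference
t_obs <- (d_bar - 0) / se              # observed test statistic under H0: mu_D = 0
p_val <- pt(t_obs, df = n - 1, lower.tail = FALSE)  # one-sided p-value

t_crit <- qt(0.975, df = n - 1)        # 2.5% critical value of t with 249 df
ci     <- d_bar + c(-1, 1) * t_crit * se            # 95% confidence interval

print(c(t_obs = t_obs, p_val = p_val))
print(ci)
```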

13.2 Independent samples t-test

Now the two samples are independent, and there is no natural pairing. Also, often the samples are of different sizes. An example is: investigating the difference in salary between male and female executives.

Population 1: mean $\mu_1$, variance $\sigma_1^2$

Sample 1: sample size $n_1$, mean $\overline X_1$, variance $S_1^2$

Population 2: mean $\mu_2$, variance $\sigma_2^2$

Sample 2: sample size $n_2$, mean $\overline X_2$, variance $S_2^2$

The model

The model equation is

\[X_{ij} = \mu_i + \varepsilon_{ij},\]

where $X_{ij}$ is observation $j$ from population $i$, $i = 1, 2$, $j = 1, \dots, n_i$, and $\varepsilon_{ij}$ is the random error.

So, $i = 1$ denotes population 1, and the model equation becomes

\[X_{1j} = \mu_1 + \varepsilon_{1j}.\]

Here $X_{11}, \dots, X_{1n_1}$ denote the observations from the first sample, and $X_{21}, \dots, X_{2n_2}$ denote the observations from the second sample.

Assume that the two samples are from normal populations.

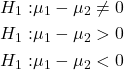

Hypotheses

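The hypotheses are

\[H_0: \mu_1 - \mu_2 = 0\]

versus one of

\[H_1: \mu_1 - \mu_2 \neq 0, \qquad H_1: \mu_1 - \mu_2 > 0, \qquad H_1: \mu_1 - \mu_2 < 0.\]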

NOTE:

The order of subtraction is important and depends on the question of interest.

Model assumptions

The model has the following assumptions.

- The individual samples are from normally distributed populations.

- The variances of the two populations are equal (or homogeneous). This is also referred to as homoscedasticity.

- Each sample is independent and the two samples are independent of each other.

We will discuss later how to verify these assumptions.

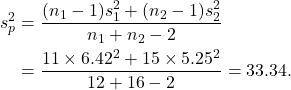

Pooled variance

Note that the sample means may be different. We have assumed ![]() , that is, the two populations have a common variance. In practice

, that is, the two populations have a common variance. In practice ![]() and

and ![]() are unknown and need to be estimated. We estimate this common variance by the pooled variance. Since the two sample means may be different, but the variances are (assumed) equal, we compute the sum of squares about each mean separately, and then weight these by the sample sizes. The pooled variance is given by

are unknown and need to be estimated. We estimate this common variance by the pooled variance. Since the two sample means may be different, but the variances are (assumed) equal, we compute the sum of squares about each mean separately, and then weight these by the sample sizes. The pooled variance is given by

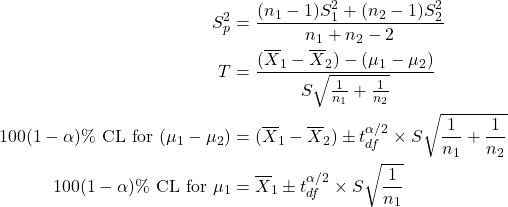

![]()

Test statistic

Using our results from sampling, the standardised difference in sample means is

\[Z = \frac{(\overline X_1-\overline X_2) - (\mu_1-\mu_2)}{\sqrt{\frac{\sigma_1^2}{n_1}+\frac{\sigma_2^2}{n_2}}} \sim {\rm N}(0,1)\]

where the distribution is exact if the populations are normal, and only approximate if the populations are not normal AND each sample size is at least 30.

Since we are assuming the population variances are equal, we replace the variances by $\sigma^2$, so this gives

\[Z = \frac{(\overline X_1-\overline X_2) - (\mu_1-\mu_2)}{\sigma\sqrt{\frac{1}{n_1}+\frac{1}{n_2}}} \sim {\rm N}(0,1)\]

Finally, we approximate this common variance $\sigma^2$ by the pooled sample variance $S^2$, and this gives

\[T = \frac{(\overline X_1-\overline X_2) - (\mu_1-\mu_2)}{S\sqrt{\frac{1}{n_1}+\frac{1}{n_2}}} \sim t_{n_1+n_2-2}\]

NOTE:

The order of subtraction is important, and depends on the hypotheses.
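To make the pooled variance and the test statistic concrete, here is a minimal R sketch. The helper `pooled_t` is hypothetical (written for this illustration, not a built-in function), and the two samples are simulated, made-up data used only to check the formula against R's `t.test` with `var.equal = TRUE`.

```r
# Hypothetical helper: pooled variance and t statistic for H0: mu_1 - mu_2 = 0.
pooled_t <- function(x1, x2) {
  n1 <- length(x1)
  n2 <- length(x2)
  # Pooled variance: sample variances weighted by their degrees of freedom.
  s2 <- ((n1 - 1) * var(x1) + (n2 - 1) * var(x2)) / (n1 + n2 - 2)
  # Standardised difference in sample means.
  t_stat <- (mean(x1) - mean(x2)) / sqrt(s2 * (1 / n1 + 1 / n2))
  list(pooled_var = s2, t = t_stat, df = n1 + n2 - 2)
}

# Simulated (made-up) samples, for illustration only.
set.seed(42)
x1 <- rnorm(12, mean = 5, sd = 2)
x2 <- rnorm(16, mean = 4, sd = 2)

res     <- pooled_t(x1, x2)
builtin <- t.test(x1, x2, var.equal = TRUE)

# The hand-computed statistic should agree with the built-in test.
print(all.equal(res$t, unname(builtin$statistic)))
```

Swapping the order of `x1` and `x2` changes the sign of the statistic, which is why the order of subtraction must match the alternative hypothesis.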

Example 13.5

In a medical study, 12 men with high blood pressure were given calcium supplements, and 16 men with high blood pressure were given a placebo. The reduction in blood pressure (in mm Hg) over a ten week period was measured for both groups. The summary statistics for the first (i.e. treatment) group were

![]()

and for the second (i.e. control) group were

![]()

Is the reduction in blood pressure greater for the group who received calcium supplements?

Solution

Let $\mu_1$ and $\mu_2$ denote the mean decrease in blood pressure for the treatment (calcium) and control (placebo) groups respectively. The hypotheses of interest are:

\[H_0: \mu_1 - \mu_2 = 0 \quad \text{versus} \quad H_1: \mu_1 - \mu_2 > 0.\]

First we estimate the pooled variance, which gives $s^2 = 33.34$ (the value used in the test statistic below).

The observed value of the test statistic is then

\[t_{obs} = \frac{(\overline x_1-\overline x_2) - (\mu_1-\mu_2)}{s\sqrt{\frac{1}{n_1}+\frac{1}{n_2}}} = \frac{4.10-0.03}{\sqrt{33.34}\sqrt{\frac{1}{12}+\frac{1}{16}}}= 1.846.\]

The p-value of the test is ![]() , so there is insufficient evidence to reject the null hypothesis. We conclude that there is no significant difference in mean blood pressure reduction for the two groups.

Conclusion: Calcium supplements do not reduce blood pressure significantly.

Equal Variance assumption

The equal variance assumption can be verified by using one of Bartlett’s or Levene’s tests, available in R. Note that these tests require the original data.

Another informal way to verify this assumption is that the ratio of the larger variance to the smaller should not be greater than 2. In the last example,

![]()

so there is no reason to doubt the equal variance assumption.

Confidence Interval for a Difference of Population Means

The general form of a confidence interval for a parameter $\theta$ is

\[\hat\theta \pm c_{\alpha/2} \times {\rm SE}(\hat\theta)\]

where

- $\hat\theta$ is a point estimate of $\theta$;

- $c_{\alpha/2}$ is the $\alpha/2$ level critical value. (Note that the distribution from which this critical value is taken can be different, depending on what is being estimated. We will always need just the $t$ or normal distributions.)

- ${\rm SE}(\hat\theta)$ is the standard error of the point estimate $\hat\theta$.

The confidence interval is a simple matter of unravelling the test statistic,

\[T = \frac{(\overline X_1-\overline X_2) - (\mu_1-\mu_2)}{S\sqrt{\frac{1}{n_1}+\frac{1}{n_2}}}\]

If we write the expression for $\mu_1 - \mu_2$ from this we get

\[(\overline X_1-\overline X_2) \pm t^{\alpha/2}_{n_1+n_2-2}\, S\sqrt{\frac{1}{n_1}+\frac{1}{n_2}},\]

where the degrees of freedom are $n_1+n_2-2$, the denominator for the estimate of the variance.

Note

- We used the $t_{n_1+n_2-2}$ distribution in this case, because the denominator for the estimate of variance here was $n_1+n_2-2$.

- This same t-distribution will be used whenever a t-distribution is needed in this context.

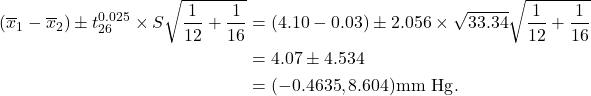

Example 13.5 (ctd)

The df for the t-distribution is $n_1 + n_2 - 2 = 12 + 16 - 2 = 26$. The corresponding 2.5% critical value for the $t_{26}$ distribution is 2.056. Then a 95% CI for $\mu_1 - \mu_2$ is given by

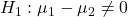

Note

- This interval includes 0. Thus at the 5% level of significance we will not reject

\[H_0: \mu_1-\mu_2=0\]

in favour of

\[H_1: \mu_1-\mu_2 \neq 0,\]

and at the 2.5% level of significance we will not reject

\[H_0: \mu_1-\mu_2=0\]

in favour of

\[H_1: \mu_1-\mu_2 > 0\]

(or the left-sided test).

- If the interval does not include 0, then at the 5% level of significance we will reject

\[H_0: \mu_1-\mu_2=0\]

against

\[H_1: \mu_1-\mu_2 \neq 0.\]

- This establishes the relationship between hypothesis tests and confidence intervals.
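The interval for Example 13.5 can be checked from the summary statistics alone. The sketch below assumes the values that appear in the worked example (sample sizes 12 and 16, sample means 4.10 and 0.03, pooled variance 33.34); the variable names are chosen here for illustration.

```r
# Summary statistics taken from Example 13.5.
n1 <- 12;      n2 <- 16        # calcium and placebo group sizes
xbar1 <- 4.10; xbar2 <- 0.03   # sample mean reductions (mm Hg)
s2_pooled <- 33.34             # pooled variance

df     <- n1 + n2 - 2                          # degrees of freedom
se     <- sqrt(s2_pooled * (1 / n1 + 1 / n2))  # standard error of the difference
t_crit <- qt(0.975, df)                        # 2.5% critical value

# 95% confidence interval for mu_1 - mu_2.
ci <- (xbar1 - xbar2) + c(-1, 1) * t_crit * se
print(ci)

# TRUE when the interval contains 0.
print(ci[1] < 0 && ci[2] > 0)
```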

Confidence Interval for a Single Mean

A confidence interval for a single mean $\mu_i$ is given by:

\[\overline X_i \pm t^{\alpha/2}_{n_1+n_2-2}\, \frac{S}{\sqrt{n_i}}\]

Note: Be careful!

- Note that this has a similar form to the CI for a single sample mean.

- However, the pooled standard deviation $S$ is used, as it is a better estimate of the population standard deviation.

- Consequently, the corresponding df for the t-distribution is $n_1+n_2-2$. So the same critical value is used.

- But the denominator uses $n_i$, the sample size corresponding to $\overline X_i$.

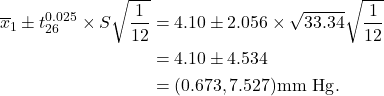

Example 13.5 (ctd)

The df for the t-distribution is $n_1 + n_2 - 2 = 26$. The corresponding 2.5% critical value for the $t_{26}$ distribution is 2.056. Then, using $\overline x_1 = 4.10$, $n_1 = 12$ and the pooled variance $s^2 = 33.34$, a 95% CI for $\mu_1$ is given by

\[4.10 \pm 2.056 \times \frac{\sqrt{33.34}}{\sqrt{12}} = 4.10 \pm 3.43 = (0.67, 7.53).\]

Exercise

Compute a 95% confidence interval for $\mu_2$ for this example. You will find that there is substantial overlap between the confidence intervals for $\mu_1$ and $\mu_2$. This is consistent with the finding that the two means are not significantly different at the 5% level of significance.

Example 13.6

Severe idiopathic respiratory distress syndrome (SIRDS) is a serious condition that can affect newborn infants, often resulting in death. The table below gives the birth weights (in kilograms) for two samples of SIRDS infants. The first sample contains the weights of 12 SIRDS infants that survived, while the second sample contains the weights of 14 infants that died of the condition.

| Survived | 1.130 | 1.680 | 1.930 | 2.090 | 2.700 | 3.160 | 3.640 | 1.410 | 1.720 | 2.200 | 2.550 | 3.005 | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Died | 1.050 | 1.230 | 1.500 | 1.720 | 1.770 | 2.500 | 1.100 | 1.225 | 1.295 | 1.550 | 1.890 | 2.200 | 2.440 | 2.730 |

It is of interest to medical researchers to know whether birth weight influences a child’s chance of surviving with SIRDS. Define population 1 to be the population of SIRDS infants that survive, with mean $\mu_1$ and variance $\sigma_1^2$. Let population 2 be the population of non-surviving SIRDS infants, with mean $\mu_2$ and variance $\sigma_2^2$.

We will address the following questions.

(a) Is there significant evidence that the population mean birth weights differ for survivors and non-survivors?

(b) Compute a 95% confidence interval for the difference between the population means.

Solution

(a) We shall test

\[H_0: \mu_1 - \mu_2 = 0 \quad \text{versus} \quad H_1: \mu_1 - \mu_2 \neq 0.\]

We now need to decide whether or not to assume equal population variances. The sample standard deviations are 0.758 for group 1 (survived) and 0.554 for group 2 (died). The ratio of larger to smaller is $0.758/0.554 \approx 1.37$ (equivalently, a variance ratio of about 1.87, which is below 2), suggesting that an assumption of common variance is not too unreasonable.

We shall conduct the test in R. The commands and output are below.

survived <- c(1.130,1.680,1.930,2.090,2.700,3.160,3.640,1.410,1.720,2.200,2.550,3.005)

died <- c(1.050,1.230,1.500,1.720,1.770,2.500,1.100,1.225,1.295,1.550,1.890,2.200,2.440,2.730)

(ttest <- t.test(survived, died, var.equal = TRUE))

Two Sample t-test

data: survived and died

t = 2.0929, df = 24, p-value = 0.04711

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

0.007461898 1.071228578

sample estimates:

mean of x mean of y

2.267917 1.728571

The observed value of the t-statistic is $t_{obs} = 2.093$, which gives a p-value of $0.047$. We have sufficient evidence to reject $H_0$ at a 0.05 significance level. Note that the evidence is marginal.

(b) A 95% confidence interval for $\mu_1 - \mu_2$ is

\[(0.0075, 1.0712).\]

Note that this confidence interval lies above 0. This indicates that $\mu_1 \neq \mu_2$, that is, the mean weight of surviving children is different from that of those who did not survive. From the data, the mean weight of surviving children is higher.
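The informal variance check used in part (a) can be complemented by a formal test, since the original data are available. The sketch below computes the variance ratio and runs Bartlett's test with the base R function `bartlett.test`; Levene's test is not in base R and is therefore not shown. The variable name `var_ratio` is chosen here for illustration.

```r
# Birth weights (kg) from Example 13.6.
survived <- c(1.130, 1.680, 1.930, 2.090, 2.700, 3.160, 3.640,
              1.410, 1.720, 2.200, 2.550, 3.005)
died     <- c(1.050, 1.230, 1.500, 1.720, 1.770, 2.500, 1.100,
              1.225, 1.295, 1.550, 1.890, 2.200, 2.440, 2.730)

# Informal check: ratio of the larger to the smaller sample variance.
v <- c(var(survived), var(died))
var_ratio <- max(v) / min(v)
print(var_ratio)

# Formal check: Bartlett's test of equal variances
# (H0: the two population variances are equal).
bt <- bartlett.test(list(survived, died))
print(bt)
```

A large p-value from Bartlett's test would give no reason to doubt the equal variance assumption; note that this test is itself sensitive to departures from normality.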

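13.3 Summary

- Paired t-test: Difference the data and perform a one-sample t-test on the differences.

- Independent samples t-test: Assuming normal populations with equal variances, estimate the common variance by the pooled variance and use the $t_{n_1+n_2-2}$ distribution for the test and for confidence intervals.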