19 Prompt Engineering

Prompt engineering, in contrast to prompt design, is a more technical and systematic approach that utilises principles researched and tested by computer scientists. It goes beyond basic prompt formulation by employing advanced techniques to optimise a model’s performance.

Prompt engineering requires an understanding of the underlying behaviour of models. It uses structured patterns and syntax to improve response accuracy and efficiency. While prompt design often emphasises user experience and communication clarity, prompt engineering involves deeper technical refinement to maximise the model’s potential in complex situations.

This section outlines the techniques and demonstrates how to apply the patterns. You may have already begun using some of these techniques. Importantly, it is a great idea to experiment with them, combine them, and integrate them into your iterative approach to prompt creation.

Zero-shot Prompting

Zero-shot Prompting

Early examples in this book demonstrate zero-shot prompts. This technique allows an AI model to receive a prompt without any prior examples or context to guide its response. Instead of being presented with examples of the desired output format, the model must rely solely on its pre-trained knowledge to generate an answer. This method is advantageous when using well-trained language models that possess extensive general knowledge and can infer patterns from context

Zero-shot prompting excels at straightforward tasks such as answering factual questions, summarising text, or making logical inferences. However, it may encounter difficulties with more intricate or domain-specific requests that require examples or additional context clarification.

An example of a zero-shot prompt is asking a model to summarise case law. This prompt assumes that the model already understands what the judgment entails and the instructions for summarising it.

Summarise the key legal principles and significance of the case Mabo v Queensland (No 2) in Australian land law.

This is a zero-shot prompt because it provides no examples or structured guidance for how the model should generate its response. Instead, the model must rely entirely on its pre-existing knowledge of the judgment to summarise the key legal principles from the decision and explain their significance. Furthermore, the model is expected to infer the appropriate level of legal detail without additional instructions. For legal practitioners, zero-shot prompting may assist in explaining legal doctrines or analysing legislation without requiring prior examples. However, this assumes that the model is trained on legal information.

Zero-shot prompting tends to be more effective with fine-tuned LLMs.[1] Moreover, models trained using reinforcement learning from human feedback often demonstrate improved performance with zero-shot prompts. Reasoning models, including OpenAI’s o-family training via reinforcement learning, therefore, excel with zero-shot prompting.

Few-shot prompting

Few-shot prompting

While LLMs can perform many tasks, including examples in the prompt, can help the model understand the specific format, style, or criteria you want it to follow

Few-shot prompting is a technique where a model receives a prompt along with a few examples to guide its response. Unlike zero-shot prompting, which depends entirely on the model’s pre-trained knowledge, few-shot prompting offers context by illustrating how similar queries should be answered. This approach helps the model recognise patterns, adapt its tone and structure, and produce more accurate or contextually relevant responses. For instance, a few-shot prompt in legal applications might feature multiple case summaries with analyses before asking the AI to summarise a new case in a similar format. This method is beneficial for handling complex, nuanced, or domain-specific tasks, where the model benefits from seeing structured examples before generating an output.

To elaborate on the earlier example, a few-shot prompt for summarising case law would consist of an example and a preferred output format.

Summarise the key legal principles and significance of the case Mabo v Queensland (No 2) in Australian land law. Follow the format of the examples provided below.”

Example:

Case: Donoghue v Stevenson (1932)

Legal Principles: Established the modern law of negligence and the duty of care principle, where manufacturers owe a duty to consumers to prevent foreseeable harm.

Significance: This case introduced the “neighbour principle” to establish when a duty of care might arise. Which became the foundation of negligence law in common law jurisdictions, including Australia.

Task:

Case: Mabo v Queensland (No 2) (1992)

Legal Principles: [complete this section]

Significance: [complete this section]

Brown et al. observed that LLMs learned to perform a task by providing just one example (i.e., a 1-shot approach).[2] More difficult tasks required an increase in the number of examples (e.g., 3-shot, 5-shot, 10-shot, etc.). However, where the task requires more complex reasoning, few-shot prompting may not be reliable.

While few-shot prompting effectively guides a model’s response, it has several limitations. First, it relies on the model correctly interpreting the provided examples, which may not always ensure precise or contextually accurate outputs, especially in complex or nuanced matters. Min et al. have shown that there are additional tips/tricks when doing few-shot prompts.[3] Additionally, the model may overfit the given examples, leading to rigid or overly formulaic responses rather than adaptive reasoning. Few-shot prompting also depends on the quality and diversity of the examples. Poorly chosen or biased samples can skew the results. Moreover, models have token limitations, meaning that lengthy legal analyses or case law summaries, including multiple examples, may restrict the depth of the final response. Finally, unlike fine-tuned models, few-shot prompting does not enhance the model’s long-term learning; each session begins anew without retaining prior refinements.

Prompt Chaining

Prompt Chaining

Prompt chaining is a technique that breaks down complex tasks into a sequence of smaller, interconnected prompts. Each prompt’s output serves as input for the next prompt in the chain. This approach enables more systematic problem-solving and can help manage complex tasks that may be too large or nuanced for a single prompt. Each step in the chain can be refined independently, making the process more controllable and the results more reliable.

Anthropic describes the advantages to include:[4]

- Accuracy: Each subtask gets the model’s full attention, reducing errors.

- Clarity: Simpler subtasks mean more explicit instructions and outputs.

- Traceability: Easily pinpoint and fix issues in your prompt chain.

Anthropic also provide a legal example:[5]

You’re our Chief Legal Officer. Review this SaaS contract for risks, focusing on data privacy, SLAs, and liability caps.

{{CONTRACT TEXT}}

Output your findings in <risks> tags.

- Data Privacy (Section 7):

– Issue: Vendor has broad rights to use client data for “service improvement.”

– Risk: Could lead to unauthorized data sharing or monetization of client data.

– Impact: Potential breach of client trust, regulatory fines (GDPR, CCPA). - SLAs (Section 5):

– Issue: 99.5% uptime guarantee is below industry standard (typically 99.99%).

– Risk: Up to 43.8 hours of downtime per year vs. 52.6 minutes at 99.99%.

– Impact: Operational disruptions, revenue loss, client dissatisfaction. - Liability (Section 9):

– Issue: Liability cap set at 3 months of fees.

– Risk: Inadequate for potential data breaches, IP theft, or prolonged service failures.

– Impact: Insufficient compensation for damages that could far exceed the cap, like class-action lawsuits or regulatory fines.

Prompt 2

Draft an email to a SaaS product vendor outlining the following concerns and proposing changes. Here are the concerns:

{{CONCERNS}}

Dear {{VENDOR}},

After a thorough review of the proposed SaaS agreement, our legal team has identified several high-priority concerns that must be addressed before we can proceed:

- Data Privacy (Section 7):

– Current: Broad rights to use client data for “service improvement.”

– Concern: Potential unauthorized data sharing or monetization.

– Proposed Change: Limit data use to direct service provision. Any use for improvement, analytics, or marketing must be opt-in, revocable, and anonymised. - SLAs (Section 5):

– Current: 99.5% uptime guarantee.

– Concern: Up to 43.8 hours of downtime per year, risking operational disruptions.

– Proposed Change: 99.99% uptime with tiered service credits. - Liability (Section 9):

– Current: Cap at 3 months of fees.

– Concern: Grossly inadequate for data breaches, IP theft, or prolonged outages.

– Proposed Change: 12 months’ fees for general liability. Uncapped for data breaches, willful misconduct, IP infringement.

These changes are non-negotiable for us. They align with industry standards and our commitment to data security, operational excellence, and fiduciary responsibility. We value the potential of our partnership, and addressing these will set a strong foundation.

Please have your legal team review and incorporate these changes. We’re happy to discuss further to ensure mutual understanding and agreement.

Best regards,

Prompt 3

Your task is to review an email and provide feedback. Here is the email:

{{EMAIL}}

Give feedback on tone, clarity, and professionalism.

- Tone:

– Professional and assertive without being confrontational.

– Use of “non-negotiable” is strong but justified given the criticality of the issues.

– Collaborative language (“we value,” “happy to discuss”) balances the firmness, maintaining a constructive tone. - Clarity:

– Excellent structuring: Current state, concern, and proposed change for each issue.

– Technical terms (e.g., “EBITDA,” “tiered service credits”) are appropriate for a B2B SaaS context.

– Action items are clear: vendor’s legal team to review and incorporate changes. - Professionalism:

– Focuses on mutual benefit and industry standards, not just our demands.

– Mentions broader responsibilities (data security, operational excellence, fiduciary duty), elevating the discussion.

– Offers further discussion, showing commitment to understanding and resolving issues.

Overall, the email strikes the right balance of assertiveness and collaboration. It clearly articulates our concerns, proposes specific solutions, and maintains a professional, partnership-oriented tone. No revisions needed; proceed with sending.

Chaining works well when using text-based context with a large text document. An initial prompt that provides a document can extract relevant information (e.g., quotes, data, and important details). The second chained prompt can then utilise the extracted information to answer the desired questions from the original document. This is particularly beneficial in a legal research use case to analyse the factors that led to a judge’s decision.

Chain-of-Thought Prompting

Chain-of-Thought Prompting

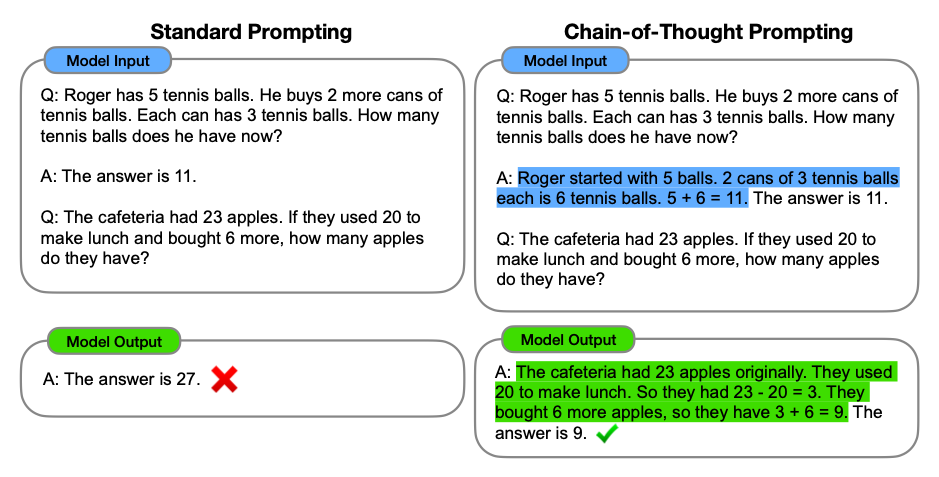

Chain-of-thought prompting enhances the reasoning capabilities of LLMs by guiding them to generate intermediate steps that lead to a final answer.[6] This method enables models to tackle complex, multi-step problems more effectively by prompting them to elicit multi-step reasoning. In mathematical problem-solving, chain-of-thought prompting encourages a model to break down calculations in a sequential manner. This structured reasoning improves accuracy and provides transparency into the model’s decision-making process, making it easier to trace and understand how specific conclusions are reached. This approach lies at the core of many newer strategies and is reflected in the ‘reasoning’ models.

An example prompt can be:

Under Australian law, can a landlord legally evict a tenant without notice?

Please consider relevant statutes and legal principles. Think step by step before providing your conclusion.

This prompt does not provide examples but instructs the model to ‘think step by step’, encouraging it to logically analyse the legal question before forming a conclusion. This method helps the user understand the structured reasoning process and evaluate the model’s assumptions.

Google researchers first described a chain of thought with the following example. The reasoning steps are highlighted in blue.

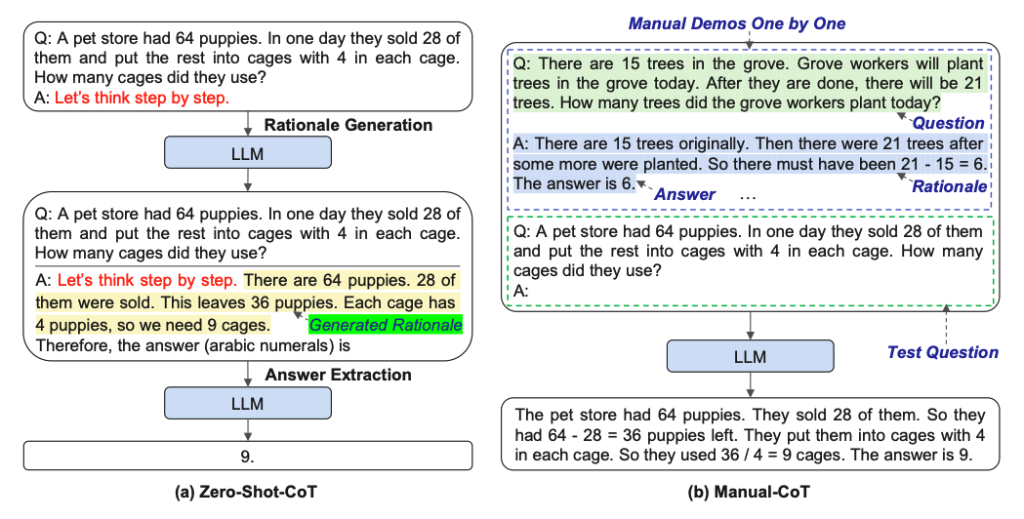

There are several ways to prompt a model using a chain-of-thought approach. The first is a zero-shot chain-of-thought, where you prompt the model to ‘think step by step’ or ‘let’s solve this step by step’. You can get the model to explicitly show its reasoning process. Yu, Quartey, and Schilder provide the following example of zero-shot chain-of-thought prompting in legal contexts.[7]

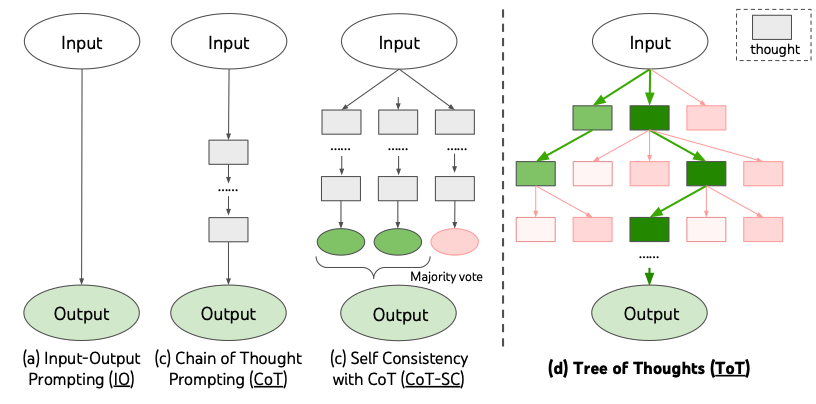

The other approach, known as manual chain-of-thought, involves the user demonstrating the reasoning by providing the model with an example. Zhang et al. compare these approaches in the diagram below.[8]

An example manual chain-of-thought prompt could be:

Under Australian trade mark law, can a business use the name “Mr Nike”, which is a similar name to a registered trade mark ‘Nike’ without infringing? Follow the structure of the examples provided below and think step by step before providing a conclusion.

Example: Trade Mark Infringement in Australia

Legal Question: Can a business use a similar name to a registered trade mark without infringing?

Step 1: Identify the Legal Framework

Trade mark law in Australia is governed by the Trade Marks Act 1995 (Cth). Trade mark registration provides exclusive rights to use the mark as registered in connection with specified goods and services.

Step 2: Consider General Trade Mark Protections

Section 120 of the Trade Marks Act 1995 (Cth) states that a registered trade mark is infringed if an unauthorised party uses a sign as a trade mark that is substantially identical or deceptively similar to the registered trade mark in relation to goods or services in respect of which the trade mark is registered or are of the same, the same description or closely related goods/services.

Step 3: Examples Where Use is Allowed

Use of a similar mark may be lawful in cases such as:

- Different industries – If the goods/services are unrelated (e.g., “Eagle Tech” for software vs. “Eagle Cafe” for food services).

- Descriptive use. If the name is used descriptively rather than as a trade mark (e.g., using “smart” in a generic sense).

- Used in good faith. In certain circumstances under s 122.

Step 4: Apply to the Scenario

- If a small bakery starts using the name “McRonald’s” for sandwiches, it is likely infringing on the McDonald’s trade mark due to deceptive similarity.

- However, if “McRonald’s” is a long-standing surname-based business in an unrelated field, it may argue that it used it in good faith as their name or the name of a predecessor in the business of the person.

Conclusion: A business can use a similar name without infringing if it operates in a different industry, uses the name descriptively, or uses it in good faith in certain circumstances. However, using a deceptively similar name for related goods/services is likely to be infringing under Australian trade mark law in relation to the same or similar goods and services for which the trade mark is registered.

This prompt provides structured examples to guide the model’s reasoning process. It breaks down legal analysis into logical steps, ensuring the model applies them effectively.

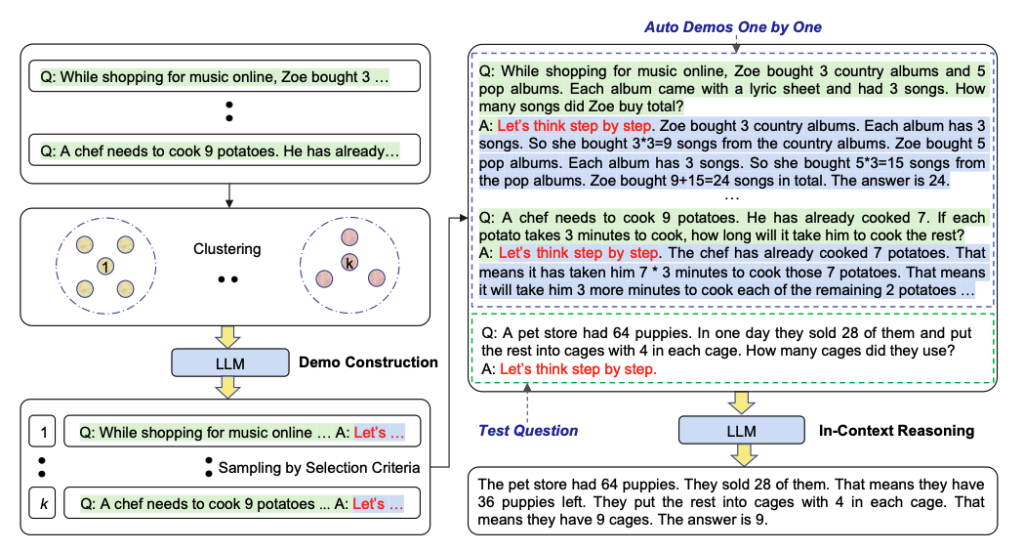

However, maintaining manual chain-of-thought prompting can be challenging and time consuming. Another approach Zhang et al. present is ‘automatic’ chain-of-thought.[9] Automatic chain-of-thought eliminates the need for manual effort by leveraging LLMs to generate reasoning chains. In the example below, the model is prompted with phrases like ‘Let’s think step by step, ‘ which enables it to produce diverse and effective reasoning paths for the task.

Studies have shown that Automatic chain-of-thought can match or exceed the performance of manually designed prompts across several reasoning benchmarks, making it a scalable and efficient approach for complex problem-solving.[10]

Under Australian trade mark law, can a small business use a name similar to a well-known registered trade mark without legal consequences?

Let’s think step by step.

The example is similar to a zero-shot chain-of-thought, but it utilises automation to generate reasoning structures more effectively. It is typically triggered with phrases like ”Let’s think step by step”.

This prompt does not provide explicit examples but instructs the model to generate logical reasoning chains autonomously, without pre-designed demonstrations.

Meta Prompting

Meta Prompting

Meta prompting is a technique for directing an LLM to approach or structure its responses at a higher level. This method moves away from example-based learning, such as few-shot and chain-of-thought. Zhang et al. describe how the approach utilises mathematical formalisms and structured representations to enhance the reasoning capabilities of LLMs beyond traditional prompting methods.[11]

This type of prompting prioritises the format and pattern of problems and solutions, using a structured, syntax-oriented approach.[12] Meta prompting, used for complex reasoning tasks, deconstructs intricate problems into simpler sub-problems. For example:

Problem Statement:

You are an AI legal assistant specialising in Australian law. Use the following structured reasoning process to analyse a legal issue. Ensure compliance with Australian legal principles, case law, and statutory interpretation.

Problem: Analyse whether the statement {{insert a statement}} made in an online forum constitutes defamation under Australian law.

Solution:

Step 1: Identify the relevant legal framework, including the Defamation Act 2005 (Cth) and applicable case law.

Step 2: Break down the key legal elements of defamation: (1) Publication, (2) Identification, (3) Defamatory meaning, (4) Lack of defence.

Step 3: Assess possible defences such as justification (truth), honest opinion, and qualified privilege.

Step 4: Provide a structured argument applying the elements to the given scenario, considering relevant precedents.

Step 5: Conclude with a risk assessment of potential legal liability and possible legal remedies.

Final Answer: A structured legal analysis assessing whether the statement constitutes defamation.

The advantages of meta prompting include reducing the need for extensive few-shot examples, resulting in more concise and scalable prompting. It operates independently of specific examples, ensuring a more neutral and unbiased problem-solving process.

Self-Consistency

Self-Consistency

As proposed by Wang et al., self-consistency seeks to enhance the effectiveness of chain-of-thought prompting for tasks related to arithmetic and commonsense reasoning.[13] The prompting builds on Chain-of-Thought by generating multiple independent chains of thought and then selecting the final answer based on consistency or majority agreement among the chains.

This approach can be especially valuable for complex problems in which multiple perspectives or methods may be valid. Here’s an example of utilising self-consistency prompting in a legal context:

Analyse the following excerpt from the Competition and Consumer Act 2010 (Cth) regarding misleading and deceptive conduct under Australian Consumer Law (ACL). Legislation Excerpt: Section 18: “A person must not, in trade or commerce, engage in conduct that is misleading or deceptive or is likely to mislead or deceive.”

Instructions: Generate three distinct and independent reasoning pathways to interpret and apply Section 18.

For each reasoning pathway, explicitly apply: (a) Statutory interpretation principles; (b) Relevant case law examples to illustrate and support your interpretation; and (c) Regulatory guidelines or commentary by the Australian Competition and Consumer Commission (ACCC) where relevant.

After generating these reasoning pathways, critically compare them to identify commonalities, differences, and points of legal divergence.

Determine and justify the most legally consistent interpretation of Section 18, based on agreement across your generated pathways. Clearly explain why this interpretation is preferred and how it aligns with authoritative sources.

This prompt enables the model to analyse the legislative extract using various legal reasoning methods. Furthermore, it seeks to ensure consistency by cross-validating different interpretations before drawing a conclusion.

Check out a ChatGPT (4o) response here: https://chatgpt.com/share/67e91043-73b4-800d-a465-abb0eee6ac5f

Generate Knowledge Prompting

Generate Knowledge Prompting

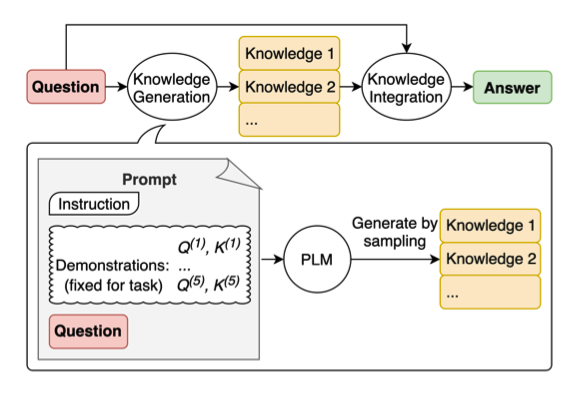

Following prompt chaining, generated knowledge prompting involves asking a model to first create background knowledge before posing a specific question. Rather than concentrating solely on the particular task, this technique encourages the model to integrate relevant background knowledge or context into its response analysis.

Liu et al. introduced this technique and found that it improved performance on commonsense reasoning tasks.[14] The structure of this approach is shown below.

For instance, before posing the question, “Does Australian Consumer Law apply to online marketplaces?” a model could first be prompted to outline the key provisions of the Competition and Consumer Act 2010 (Cth) along with relevant case law. This strategy enhances responses by encouraging the model to collect information and ‘think out loud’ rather than jumping straight to a conclusion.

Tree of Thought (ToT)

Tree of Thought (ToT)

Rather than following a single line of reasoning, ToT considers several possible approaches or interpretations. The model evaluates them and chooses the most promising paths for further development. This technique is especially beneficial for complex problems with multiple valid approaches, where the best solution may not be immediately apparent.

Yao et al. and Long proposed ToT and provided a visual example of its differences from other approaches.[15]

However, the literature around this approach is premised on making multiple calls or queries to an LLM. Comparatively, chain-of-thought prompting appears more advantageous because it can be achieved in ChatGPT.

Dave Hulbert proposed a single-sentence prompt that applies the Tree-of-Thought framework.[16] Hulbert proposed the following complex problem, which ChatGPT 3.5 struggled with:

Bob is in the living room.

He walks to the kitchen, carrying a cup.

He puts a ball in the cup and carries the cup to the bedroom.

He turns the cup upside down, then walks to the garden.

He puts the cup down in the garden, then walks to the garage.

Where is the ball?

The suggested ToT prompt was:

Imagine three different experts are answering this question.

All experts will write down 1 step of their thinking, then share it with the group.

Then all experts will go on to the next step, etc.

If any expert realises they’re wrong at any point, then they leave.

The question is…

See ChatGPT’s (4) response to the initial problem here: https://chatgpt.com/share/67db6b8f-c418-800d-af98-35a3763bad5c

Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation (RAG)

Rather than depending exclusively on pre-trained knowledge, a model utilising RAG dynamically retrieves relevant information (for instance, from databases, legal texts, case law repositories, or government websites) before producing a response. RAG is accomplished by developing a system based on language models that taps into an authoritative knowledge base to perform tasks. This method can enhance accuracy and relevance, particularly when addressing specialised knowledge or up-to-date information.

RAG can be partially realised with tools like ChatGPT or Claude, combined with external information or data. Although these tools do not natively perform live retrieval from databases, users can manually incorporate retrieval steps into their workflow to imitate RAG.

Below is an example workflow of achieving RAG using ChatGPT for legal research.

- Use ChatGPT for Initial Context and Legal Framework

Start by asking ChatGPT to generate an overview of the legal topic. For example:

Provide a summary of misleading and deceptive conduct under Australian Consumer Law, including relevant sections of the Competition and Consumer Act 2010 (Cth).

2. Retrieve Up-to-Date Information from External Legal Sources

Use trusted legal research tools such as:

AustLII (http://www.austlii.edu.au/) – Case law & legislation.

Jade (https://jade.io/) – Recent court decisions.

3. Feed the Retrieved Data Back into ChatGPT for Analysis

Copy and paste relevant case law, legislation, or legal interpretations into ChatGPT. For example:

Here is a recent case summary from the Federal Court regarding misleading advertising. Analyse whether this case changes the interpretation of Section 18 of the ACL.

4. Generate AI-Assisted Legal Insights

ChatGPT can now interpret retrieved case law, compare it with existing legal principles, and assist in structuring legal arguments.

Example follow-up prompt:

Summarise the key differences between this case and the judgment in ACCC v TPG Internet Pty Ltd (2013) attached below.

How does it impact consumer law enforcement?

This workflow mimics RAG by:

Retrieval: The user pulls real-time legal data from official legal sources.

Augmentation: ChatGPT integrates this external knowledge into its reasoning.

Generation: The model provides legal analysis, comparisons, and structured insights.

Automatic Reasoning and Tool-use (ART)

Automatic Reasoning and Tool-use (ART)

Tools are functions that a generative AI system uses to interact with the external world. For example, within ChatGPT, the system has a feature that enables it to search the web when generating outputs. Models can also integrate with application programming interfaces (APIs) to retrieve information. Using this technique, the model is prompted to consider which tools or functions may help solve a problem, when to use them, and how to interpret their results. Researchers have developed training models to determine which tools to use, when to use them, and how to incorporate the results effectively.[17]

This technique is particularly valuable in complex analyses that frequently require external information. The model independently determines which tools to utilise and how to integrate their outputs to draw meaningful conclusions. The ART approach illustrates how models can:

However, this technique depends on a user’s comfort with AI-assisted workflows. Unlike manual prompting, tool-use prompting involves using/integrating external databases, APIs, or other legal tech solutions.

However, ART prompting can be augmented using systems like ChatGPT. You can combine structured prompts with external tools (such as legal research databases or other search engines and databases).

To use ART prompting effectively with a model like ChatGPT, you can combine structured prompts with external tools to enhance the effectiveness of the model. Below is an example workflow.

Step 1: Define the Task Clearly

Before prompting, determine the specific legal question, research objective, or document analysis task that needs to be addressed. For example:

I need to determine whether a new business name infringes on a registered trade mark under Australian law. Let’s think step by step. Also, suggest external tools that can verify trade mark registration and case law references.

Step 2: Leverage Automatic Reasoning (Self-Generated Logical Steps)

ChatGPT will automatically generate a structured legal analysis by breaking the issue into key components (i.e., chain-of-thought).

Step 3: Use Tool-Use Prompting to Enhance Accuracy

Once the model outlines legal reasoning, conduct external verification by integrating legal research tools. In this example, ChatGPT suggested external tools such as IP Australia’s Australian Trade Mark Search System (https://search.ipaustralia.gov.au/trademarks/) and the ASIC Business Name Register (https://connectonline.asic.gov.au/RegistrySearch/faces/landing/bn/SearchBnRegisters.jspx)

Step 4: Request the model to interpret External Tool Results

Once you gather the information from an external tool, you can paste it into ChatGPT for further analysis and processing. For example:

I searched for ‘GreenTech Solutions’ in the IP Australia database and found the word registered under Class 42 for ‘environmental consulting services’. My business is a renewable energy supplier.

Thinking step by step, does this pose a risk of trade mark infringement?

Automatic Prompt Engineer (APE)

Automatic Prompt Engineer (APE)

APE is a method in which a model generates prompts. Rather than manually requiring a user to craft a well-structured prompt, the model can be requested to experiment with different phrasing, structures, and variations to discover the most effective way to elicit high-quality outputs. This technique is particularly beneficial when you are unsure of where to begin and can help build upon or refine your prompts.

For instance, if a lawyer needs to analyse a recent High Court judgment, an APE approach could generate multiple versions of a legal research prompt, test which one produces the most relevant case law insights, and then refine it automatically. Instead of a prompt with “Summarise the High Court ruling in Mabo v Queensland (No 2)”, an alternative approach could be:

What is the most effective way to prompt an AI to generate a legally accurate summary of Mabo v Queensland (No 2)? Generate multiple variations of a legal research prompt that could yield the most accurate and comprehensive response. Then, evaluate and refine the best version based on clarity, specificity, and legal relevance.

See ChatGPT’s (4o) response to the initial problem here: https://chatgpt.com/share/67db7299-baa8-800d-8f60-ec9748805836

Zhou et al. introduced the method whereby an LLM was used to (i) generate proposed prompts, (ii) score them, and (iii) suggest similar prompts to the highest-scored one.[18]

Using this technique, the researchers were able to find a more effective chain of thought prompt than the one suggested by Kojima et al., namely, ‘Let’s think step by step’.[19]

Multimodal Chain-of-Thought (MCoT) Prompting

Multimodal Chain-of-Thought (MCoT) Prompting

MCoT prompting is a technique that combines visual and textual inputs to guide language models through complex reasoning tasks. Incorporating multiple data types includes text, images, documents, charts, or legal statutes

Zhang et al. proposed the approach as an extension of traditional chain-of-thought prompting by incorporating multiple modalities, such as images, diagrams, or charts, alongside text.[20] This approach enables the model to utilise both visual and textual information during its reasoning process. The model is encouraged to express its thoughts step-by-step while referencing and analysing both visual and textual elements.

For example, if a user prompted:

Does this product’s packaging infringe on a registered trade mark under the Australian Trade Marks Act 1995 (Cth)?

An MCoT prompt would direct the model to first extract key elements from an uploaded image, compare them with trade mark descriptions, and then reason through legal infringement analysis.

Remember, this only works with models that allow inputting file types other than text.

Verification prompting

Verification prompting

Verification prompting enhances the reliability of language model outputs by incorporating self-verification steps into the prompt. This approach requires the model to verify its own work, validate its assumptions, and confirm its conclusions before providing a final response. Integrating verification steps directly into the prompt structure helps reduce errors and increases the model’s accuracy. For example, in a legal context:

A website in Australia republishes excerpts from a news article without permission. Does this constitute copyright infringement under the Copyright Act 1968 (Cth), or could it qualify as fair dealing?

First, generate an initial legal analysis based on the relevant provisions of the Copyright Act 1968 (Cth), particularly sections related to copyright infringement and fair dealing exceptions.

Next, critically evaluate your response by checking for any gaps in statutory interpretation, ambiguities, or missing considerations (e.g., the purpose of reproduction, the amount copied, and potential market harm).

Then, cross-reference relevant case law (e.g., Australian decisions on copyright and fair dealing in news reporting or online content).

Finally, refine and verify your final answer, ensuring that it aligns with both statutory law and judicial precedent before concluding whether the website’s actions are lawful or infringing.

This example illustrates how verification prompting introduces additional validation layers to the analysis process. Unlike straightforward prompting techniques, verification prompting requires the model to explicitly check its work against established principles, assign confidence levels, and validate conclusions.

Take a deep breath

Take a deep breath

This approach aims to reduce rushed responses and potential errors by encouraging more deliberate and thorough analysis. Yang et al. introduced the technique by introducing phrases like “Take a deep breath” or “Let’s approach this step by step” in the prompt, signalling the model to slow down its reasoning process.[21]

For example:

Does the Copyright Act 1968 (Cth) allow an Australian university to use copyrighted materials for educational purposes without obtaining permission from the copyright holder?

Take a deep breath and analyse this step by step:

- Identify the relevant statutory provisions that govern educational use under Australian copyright law.

- Explain any applicable exceptions, such as fair dealing for education or statutory licensing schemes.

- Consider relevant case law or legal interpretations that clarify how these provisions have been applied in practice.

- Conclude whether the university can use the material without permission, taking into account any limitations or conditions.

This example illustrates how prompting with “take a deep breath” encourages more thoughtful and systematic analysis. Unlike rapid response prompting, this approach allows for intentional pauses that encourage reflection and verification.

Take a step back

Take a step back

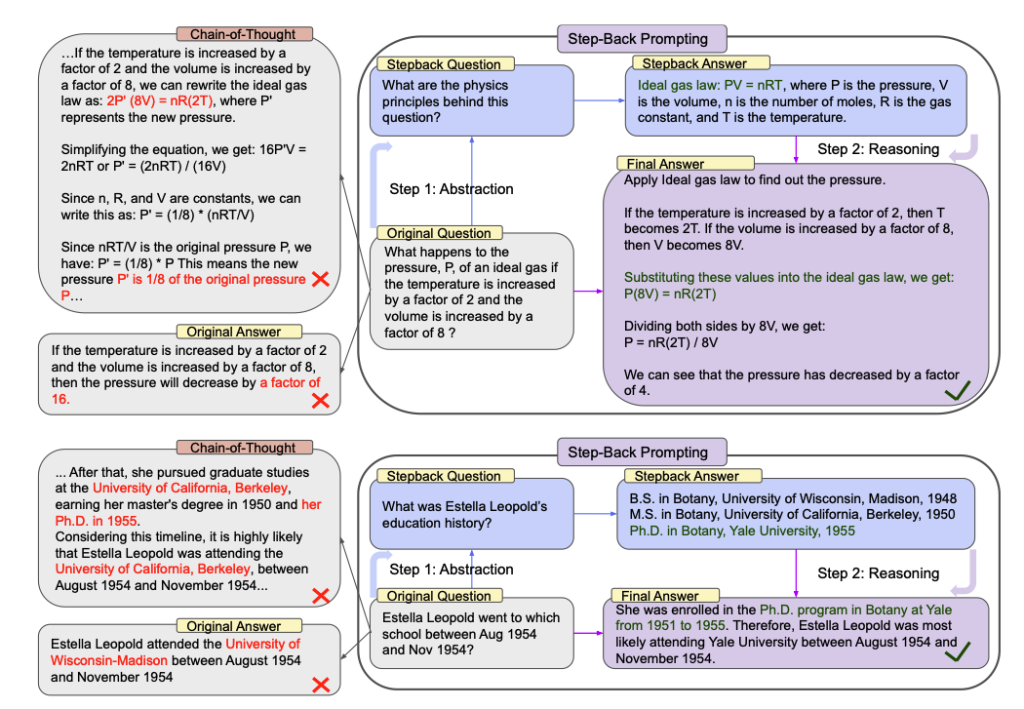

Advancing on the ‘take a deep breath’ prompting technique, researchers at Google DeepMind introduced the “Take a Step Back” prompting technique.[22] This technique encourages language models to zoom out from immediate details and consider the broader context or implications before diving into specific analysis. This approach helps prevent tunnel vision and ensures that important contextual factors are not overlooked. The technique typically involves asking the model to consider the larger picture, underlying principles, or broader implications before proceeding with detailed analysis.

The researchers explain that the technique consists of two steps:[23]

- Abstraction: Instead of addressing the question directly, we first prompt the LLM to ask a generic step-back question about a higher-level concept or principle, and retrieve relevant facts about the high-level concept or principle. The step-back question is unique for each task in order to retrieve the most relevant facts.

- Reasoning: Grounded on the facts regarding the high-level concept or principle, the LLM can reason about the solution to the original question.

The authors provide the example of instead of directly asking, “Which school Estella Leopold went to between August 1954 and November 1954?” A step-back question would instead focus on the “education history”, which is a broader concept that encompasses the original question.

Custom Instructions

Custom Instructions

Custom instructions in AI tools like ChatGPT and Claude’s personalisation features enable users to tailor their interactions by specifying preferences for response behaviour and style. They each function as customisable system prompts. Once established, these settings transfer to new conversations. In ChatGPT, users can provide details about themselves and their preferred response style, allowing the AI to adjust its outputs accordingly. Similarly, Claude offers personalisation through profile preferences, styles, and project instructions, enabling users to define general settings, adjust communication tones, and establish project-specific guidelines. These features enhance user experience by aligning AI responses with individual needs and contexts.

- See Jason Wei et al., ‘Finetuned language models are zero-shot learners’ (2021) arXiv:2109.01652 (preprint). The authors demonstrated that models fine-tuned with instructional tuning (i.e., fine-tuning models on datasets described via instructions) ↵

- Tom Brown et al., ‘Language models are few-shot learners’ (2020) 33 Advances in neural information processing systems 1877-1901. ↵

- Sewon Min et al. ‘Rethinking the role of demonstrations: What makes in-context learning work?’ (2020) arXiv:2202.12837 (preprint). ↵

- Anthropic, ‘Chain complex prompts for stronger performance’, User Guide (Web Page) <https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/chain-prompts>. ↵

- Ibid. ↵

- Jason Wei et al., ‘Chain-of-thought prompting elicits reasoning in large language models.’ (2022) 35 Advances in neural information processing systems24824. ↵

- Fangyi Yu, Quartey Lee and Frank Schilder, ‘Legal prompting: Teaching a language model to think like a lawyer’ (2022) arXiv:2212.01326 (preprint). ↵

- Zhuosheng Zhang et al., ‘Automatic chain of thought prompting in large language models’ (2022) arXiv:2210.03493 (preprint). ↵

- Ibid. ↵

- Ibid. ↵

- Yifan Zhang, Yang Yuan, and Andrew Chi-Chih Yao, ‘Meta prompting for AI systems’ (2023) arXiv:2311.11482 (preprint). ↵

- Ibid. ↵

- Xuezhi Wang et al., ‘Self-consistency improves chain of thought reasoning in language models’ (2022) arXiv:2203.11171 (preprint). ↵

- Jiacheng Liu et al.,’ Generated knowledge prompting for commonsense reasoning’ (2021) arXiv:2110.08387 (preprint). ↵

- Shunyu Yao et al., ‘Tree of thoughts: Deliberate problem solving with large language models’ (2023) 36 Advances in neural information processing systems11809-11822 and Jieyi Long, ‘Large language model guided tree-of-thought’ (2023) arXiv:2305.08291 (preprint). ↵

- Dave Hulbert, ‘Tree-of-thought prompting’ GitHub (Web Page, 2023) <https://github.com/dave1010/tree-of-thought-prompting>. ↵

- Timo Schick et al., ‘Toolformer: Language models can teach themselves to use tools’ (2023) 36 Advances in Neural Information Processing Systems 68539. ↵

- Yongchao Zhou et al., ‘Large language models are human-level prompt engineers’ (Conference Paper,The Eleventh International Conference on Learning Representations, November 2022). ↵

- Takeshi Kojima et al., ‘Large language models are zero-shot reasoners’ (2022) 35 Advances in neural information processing systems 22199. ↵

- Zhuosheng Zhang et al., ‘Multimodal chain-of-thought reasoning in language models’ (2023) arXiv:2302.00923 (preprint). ↵

- Chengrun Yang et al., ‘Large language models as optimizers’ (2023) arXiv:2309.03409 (preprint). ↵

- Huaixiu Steven Zheng et al., ‘Take a step back: Evoking reasoning via abstraction in large language models.’ (2023) arXiv:2310.06117 (preprint). ↵

- Ibid 3. ↵