2 What is Artificial Intelligence?

Artificial intelligence (AI) is a broad term encompassing many technology solutions. In a submission to the Australian Senate Select Committee on Adopting Artificial Intelligence, Xaana.ai noted:

…the term “artificial intelligence” (AI) has become a buzzword, encompassing a vast and often ambiguous range of technologies. What was once described as “big data” or “predictive analytics” can now be readily rebranded as AI, b[l]urring the lines between distinct concepts. Additionally, confusion arises from the tendency to conflate AI with automation.[1]

At its core, AI refers to computer systems designed to perform tasks that typically require human intelligence. These tasks can include recognising patterns, understanding language, solving problems, and making decisions. The field emerged in the 1950s when pioneering computer scientists began exploring whether machines could simulate human thought processes and behaviours.

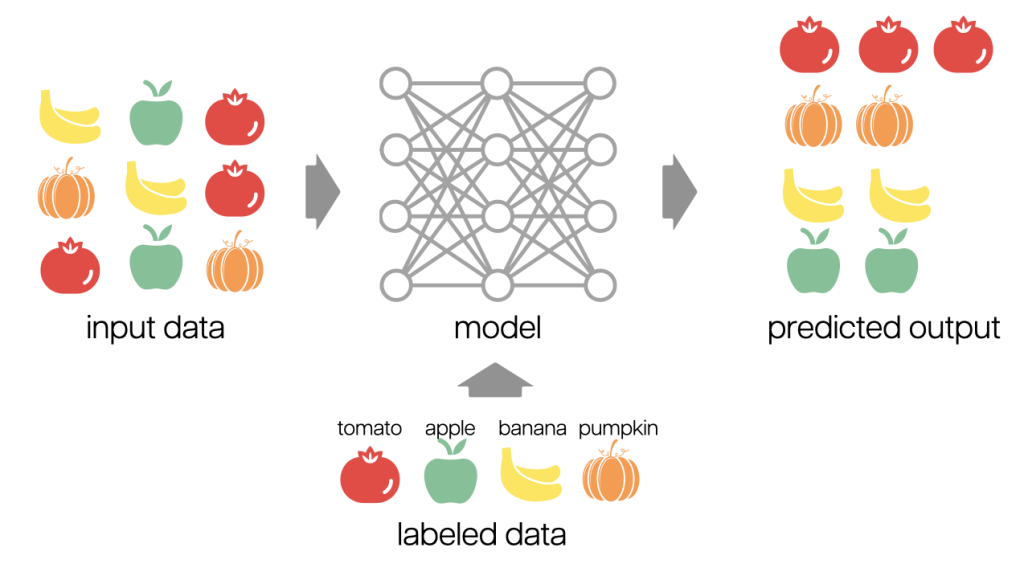

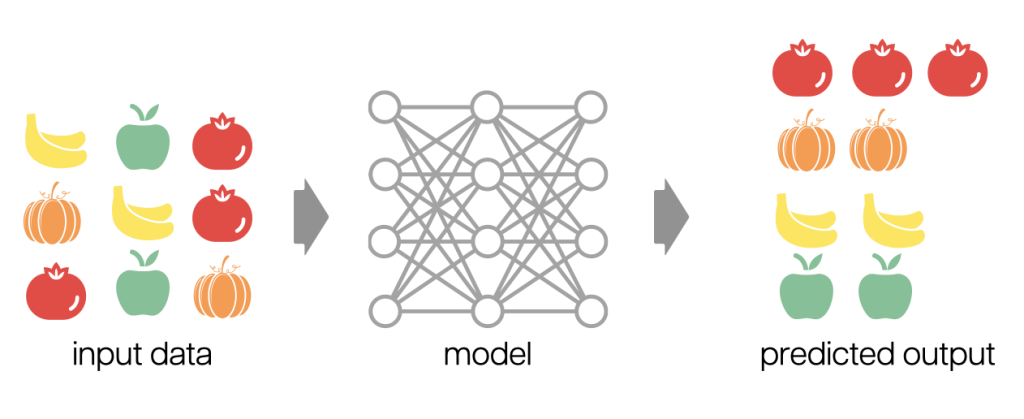

Modern AI predominantly relies on machine learning, particularly deep learning models trained on large datasets. Rather than following explicitly programmed rules, these systems learn statistical models that let them accomplish specific tasks. Their performance generally improves as they are exposed to more data. By analysing large volumes of information to identify patterns and relationships, they can make predictions or take actions in response to new inputs.

For instance, an AI system might learn to recognise cats in photographs by analysing millions of labelled images of cats, gradually refining its understanding of feline characteristics.
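The Python sketch below makes "learning from labelled examples" concrete. It uses a perceptron, one of the simplest learning algorithms. This is chosen purely for illustration; modern image classifiers are far more complex. The two numeric features and the toy data are hypothetical stand-ins for the information a real system would extract from images. No programmer writes the rule. Instead, the weights are adjusted each time the model misclassifies a training example.

```python
# A minimal perceptron sketch: it learns a decision rule from labelled
# examples instead of being given the rule explicitly.
# The features and data below are hypothetical, for illustration only.

def train_perceptron(examples, epochs=20, lr=0.1):
    """examples: list of ((x1, x2), label) pairs, label 1 = cat, 0 = not cat."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in examples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = label - pred  # 0 when correct, +1 or -1 when wrong
            # Nudge the weights and bias towards the correct answer
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b

def predict(w, b, x):
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

# Hypothetical features: (ear pointiness, whisker score)
training_data = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.7), 1),
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.2), 0),
]
w, b = train_perceptron(training_data)
print(predict(w, b, (0.85, 0.75)))  # a new, unseen input; prints 1
```

Each pass over the data reduces the number of mistakes. This is a greatly simplified version of the gradual refinement described above.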

AI can be broadly divided into narrow AI and artificial general intelligence (AGI). Narrow AI refers to current AI applications that excel at specific tasks within well-defined parameters. Examples include virtual assistants that understand speech, algorithms that recommend products based on past behaviour, and systems that detect fraudulent transactions. AGI, meanwhile, remains theoretical: it refers to AI that could match or exceed human intelligence across a wide range of tasks.

Humans vs. AI: Who should make the decision?


AI is a powerful tool, but can it surpass humans’ ability to make decisions and solve problems? In this video, Martin Keen examines the strengths and weaknesses of each.

Types of Artificial Intelligence

Artificial intelligence can be viewed through two main lenses: its capabilities and its functionalities.[2] Together, these lenses outline seven distinct types of AI, ranging from existing technologies to theoretical future developments.

Watch


Watch the following video that discusses each type of AI.

The table below organises the seven types across the two lenses. Under capabilities, we see the progression from today’s Narrow AI to theoretical Super AI, representing increasing levels of autonomy and sophistication. Under functionalities, we explore how AI operates, from simple reactive systems to theoretical self-aware entities.

| Lens | Type of AI | Description |
|---|---|---|
| Capabilities | Narrow AI | Can perform specific tasks within defined parameters but requires human training. May excel at tasks beyond human capability within its narrow domain. |
| Capabilities | Artificial General Intelligence | Would apply previous learnings to new tasks in different contexts without human training. Would be capable of self-directed learning. |
| Capabilities | Super AI | Would surpass human cognitive abilities, developing its own needs, beliefs, and desires. It would evolve beyond catering to human experiences. |
| Functionalities | Reactive Machine AI | Performs specific, specialised tasks using statistical mathematics to analyse data. |
| Functionalities | Limited Memory AI | Can recall past events and use historical and present data to make decisions. Improves with more training data. |
| Functionalities | Theory of Mind AI | Would understand human thoughts, emotions, motives, and reasoning. Could personalise interactions based on individual emotional needs. |
| Functionalities | Self-Aware AI | Would understand its internal conditions, developing its own emotions, needs, and beliefs. The most advanced theoretical form of AI. |

Expert Systems and Knowledge-Based Approaches

Early examples of AI systems include expert systems, which emerged in the 1970s and continue to provide value today. These systems encode human expertise as rules and facts, allowing them to make decisions or provide recommendations within specific domains. For example, an expert system for tax law might encode thousands of tax rules and regulations.

An expert system has three core components: a knowledge base (containing domain-specific rules and facts), an inference engine (which applies these rules to new situations), and a user interface. Expert systems are less flexible than contemporary machine learning methods. However, they excel where clear rules exist and transparency in decision-making is essential.
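The three components can be sketched in a few lines of Python. The knowledge base is a list of if-then rules. The inference engine uses forward chaining: it repeatedly fires any rule whose conditions are met. A function call stands in for the user interface. The rule and fact names are hypothetical illustrations and do not reflect real tax law.

```python
# A minimal rule-based expert system sketch with forward chaining.
# All rule and fact names are hypothetical.

# Knowledge base: each rule is (set of required facts, concluded fact)
RULES = [
    ({"is_resident", "income_above_threshold"}, "must_lodge_return"),
    ({"must_lodge_return", "has_business_income"}, "needs_business_schedule"),
    ({"is_charity"}, "income_tax_exempt"),
]

def infer(facts, rules):
    """Inference engine: fire every rule whose conditions are all known,
    repeating until no new facts can be derived."""
    known = set(facts)
    trace = []  # records which rules fired, so the reasoning is explainable
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(f"{sorted(conditions)} -> {conclusion}")
                changed = True
    return known, trace

# Stand-in "user interface": supply the initial facts and print the reasoning
conclusions, explanation = infer(
    {"is_resident", "income_above_threshold", "has_business_income"}, RULES
)
for step in explanation:
    print(step)
```

The `trace` list shows why expert systems suit settings that demand transparency. Every conclusion can be traced back to the explicit rules that produced it.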

History of AI


The history of artificial intelligence began in the mid-20th century, though its philosophical foundations reach back to ancient civilisations that contemplated the nature of human thought and reasoning. The field was established as a distinct academic discipline at the 1956 Dartmouth Conference. The conference's organisers, including John McCarthy, Marvin Minsky, and Claude Shannon, had coined the term “artificial intelligence” in their 1955 proposal for the event.

Watch


Watch this brief video that covers the history of the quest to build a machine that thinks like a human.

The early years of AI were characterised by immense optimism. Researchers developed programs that could solve mathematical problems, engage in basic conversations, and even play games such as chess. However, the 1970s brought what became known as the first “AI winter,” as early promises failed to materialise and funding dwindled. The field revived in the 1980s with the rise of expert systems, which are programs designed to tackle complex problems by emulating human expertise. This boom was also followed by a period of diminished funding and interest.

The modern era of AI began to take shape in the late 1990s, marked by IBM’s Deep Blue defeating chess champion Garry Kasparov in 1997. However, the field’s true transformation accelerated in the early 2000s, driven by three key developments: the availability of massive amounts of data, significant advancements in computing power, and breakthroughs in machine learning algorithms, particularly deep learning. This convergence led to remarkable achievements in computer vision, natural language processing, and game playing.

AlphaGo


Notable milestones include IBM Watson’s victory on Jeopardy! in 2011 and DeepMind’s AlphaGo defeating Go champion Lee Sedol in 2016. Watch the film below, which recounts the story leading up to the seven-day, five-game match in Seoul.

In recent years, AI capabilities have advanced rapidly, particularly in language models and in multimodal systems that process text, images, and other forms of data together. Since the early 2010s, AI research and development has departed markedly from earlier paradigms in computational reasoning and machine learning. This shift was driven by the convergence of far greater computational resources, vast datasets, and new deep learning architectures. The most notable of these is the transformer, which fundamentally changed natural language processing.

Between 2012 and 2018, several pivotal developments reshaped the scholarly understanding of AI’s capabilities. The breakthrough performance of AlexNet in the 2012 ImageNet competition demonstrated the viability of deep convolutional neural networks for complex visual recognition tasks. The introduction of the transformer architecture through the seminal “Attention Is All You Need” paper in 2017 then set new benchmarks in sequence modelling and translation. These advances were complemented by significant achievements in reinforcement learning, exemplified by DeepMind’s AlphaGo victory over Lee Sedol in 2016, highlighting the potential for AI systems to master complex strategic domains.

From the late 2010s onwards, the field has been characterised by the rapid evolution of large language models (LLMs) and the emergence of multimodal architectures. Sophisticated pre-trained models, beginning with BERT and GPT, have produced systems with much stronger capabilities in natural language understanding and generation. This progression culminated in the deployment of models that perform well across diverse tasks, from code generation to creative writing.

Explore


For a brief overview, explore this interactive timeline.

Watch


For those who would like a deeper dive into the history of AI, watch the following lecture by Professor Harry Surden, who provides an overview and history of AI in law.

AI, Machine Learning, Deep Learning and Generative AI Explained


The term AI is often used interchangeably with several related but distinct concepts. Understanding the relationships among AI, machine learning, deep learning, and generative AI is essential for comprehending how these technologies operate and what they mean for society. These terms represent different layers of technological capability, each building on the one before to create increasingly sophisticated systems.

Watch


Watch the following video where Jeff Crume discusses the distinctions between Artificial Intelligence (AI), Machine Learning (ML), Deep Learning (DL), and Foundation Models and how these technologies have evolved.

Explore


The following diagram illustrates how these concepts relate. Each concept falls within a broader category: deep learning is a subset of machine learning, which in turn is a subset of artificial intelligence. Generative AI, meanwhile, is a powerful application of these underlying technologies.

Machine Learning


Recall that AI broadly refers to computer systems that mimic human problem-solving or decision-making. Artificial intelligence encompasses various technical approaches and methodologies, with machine learning as one of its primary branches.

Machine learning is a subset of AI that employs various self-learning algorithms to derive knowledge from data and predict outcomes. It represents a fundamental shift from traditional rule-based programming, enabling systems to learn from data without explicit programming. Within machine learning, we find several approaches, including supervised learning (where systems learn from labelled data), unsupervised learning (where systems identify patterns in unlabelled data), and reinforcement learning (where systems learn through trial and error with feedback). Neural networks, inspired by the structure of biological brains, represent one specific type of machine learning architecture that has proven particularly effective for complex tasks.
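Unsupervised learning can be illustrated with k-means clustering in one dimension. This classic algorithm groups unlabelled data without being told what the groups are. The sketch below is a minimal version. The data values, the choice of two clusters, and the starting centroids are all hypothetical. Real applications typically work in many dimensions and rely on a library implementation.

```python
# A minimal 1-D k-means sketch (unsupervised learning).
# Data, k = 2, and the initial centroids are hypothetical.

def kmeans_1d(points, centroids, iterations=10):
    centroids = list(centroids)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid)
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# e.g. unlabelled transaction amounts
data = [1.0, 1.5, 2.0, 10.0, 11.0, 12.0]
centroids, clusters = kmeans_1d(data, centroids=[0.0, 5.0])
print(centroids)  # [1.5, 11.0]
```

The algorithm is never shown which points "belong together". It discovers the two groups from the structure of the data alone.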

Deep learning, in turn, is a specialised subset of neural network methods that uses multiple layers (hence “deep”) to process information at increasing levels of abstraction. These deep neural networks have transformed AI, driving major performance gains in computer vision, natural language processing, and speech recognition.
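The value of stacking layers shows up even in a tiny, hand-wired network. The sketch below computes XOR, a function that a single layer of threshold units cannot represent. The hidden layer computes simpler features (OR and AND), and the output layer combines them. The weights are set by hand for illustration. A real deep learning system would learn its weights from data and use many more layers and units, so this is only a shallow demonstration of the layering idea.

```python
# A hand-wired two-layer network of threshold units that computes XOR.
# Weights are chosen by hand for illustration, not learned.

def step(z):
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """One fully connected layer: each unit thresholds a weighted sum."""
    return [step(sum(w * x for w, x in zip(ws, inputs)) + b)
            for ws, b in zip(weights, biases)]

def xor_network(x1, x2):
    # Hidden layer: unit 1 acts as OR(x1, x2), unit 2 as AND(x1, x2)
    hidden = layer([x1, x2], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Output layer: "OR and not AND", which is XOR
    (out,) = layer(hidden, weights=[[1, -1]], biases=[-0.5])
    return out

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```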

Watch


Watch the following video, which visually explains what machine learning is and how it compares with AI and deep learning.

The relationship among these concepts can be viewed as a hierarchy: deep learning is a specific type of neural network, which is a category of machine learning approaches that fall under the broader umbrella of AI. Each successive level signifies a more specialised and sophisticated method of processing information and learning from data, with deep learning currently regarded as one of the most powerful and widely employed techniques in contemporary AI applications.

Generative AI


Generative AI is an approach to artificial intelligence that focuses on creating new content rather than simply analysing existing data. Systems trained on vast amounts of data can generate novel text, images, music, code, and other forms of content that exhibit characteristics similar to their training data while producing original outputs.
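A very small generative model makes the idea of "new content resembling the training data" tangible. The sketch below is a bigram (Markov chain) text generator. It records which words follow which in a toy corpus, then samples new sequences from those statistics. It is far simpler than the transformer-based systems discussed next, and the corpus is hypothetical. Even so, it can produce word sequences that never appear verbatim in its training text, such as "the dog sat on the mat".

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record, for each word, every word that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    rng = random.Random(seed)  # seeded so the output is reproducible
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:  # dead end: this word was never followed by another
            break
        output.append(rng.choice(options))
    return " ".join(output)

corpus = "the cat sat on the mat the dog sat on the rug"
model = train_bigrams(corpus)
sentence = generate(model, "the", length=8)
print(sentence)
```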

Several key architectures lie at the technical core of generative AI, with transformer models playing a central role in recent advances. Transformers use attention mechanisms to capture complex patterns in data, such as relationships between words that are far apart in a sentence, and to generate contextually relevant content.[3]
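The core attention operation from the transformer paper is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. The sketch below implements it in plain Python. It omits the learned projection matrices, multiple heads, and masking used in real transformers. The three 2-dimensional token vectors are hypothetical, and they serve as queries, keys, and values at once (self-attention).

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
    Q, K and V are lists of row vectors, one row per token."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # how strongly to attend to each token
        # Output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical token embeddings, used as queries, keys and values
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1. A token attends most strongly to the tokens whose keys are most similar to its query.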

LLMs exemplify this approach, processing and generating text with impressive fluency and apparent contextual understanding. In the visual domain, image-generation models can produce highly realistic images from text descriptions or transform existing images in complex ways.

Watch


So, what is the difference between AI and generative AI? Watch this video that explains the differences.

1. Xaana.ai, Submission No 167 to Australian Senate Select Committee on Adopting Artificial Intelligence, Final Report (November 2024) 5.
2. IBM Technology, ‘The 7 Types of AI - And Why We Talk (Mostly) About 3 of Them’ (YouTube, 11 November 2023) <https://www.youtube.com/watch?v=XFZ-rQ8eeR8>.
3. See Ashish Vaswani et al, ‘Attention Is All You Need’ (2017) 30 Advances in Neural Information Processing Systems.