16 Fundamentals to Prompting

Many of us have engaged with LLMs through tools like OpenAI’s ChatGPT. Numerous models are accessible via such a GUI. A prompt is a text input that initiates a conversation or triggers a response from the model. The user enters an inquiry, and the model responds. The prompt can include a range of questions, from simple questions (e.g., “Who is the prime minister of Australia?”) to complex problems. A prompt may also appear ambiguous, such as “Please share a joke, as I am feeling a bit down.” In some systems, prompting can involve uploading an image, a text/data file, or interacting with the model through audio input.

Prompting a Model

Remember, LLMs learn patterns from their training data to predict which words should come next in a sequence. When we write prompts, we can leverage this pattern-matching ability in three key ways: we can tap into existing strong patterns (like “Mary had a little…{finish the sentence}”), deliberately avoid them for more creative responses (like…{insert}), or create new patterns to shape the model’s output format (i.e., give me a summary and use the following headings {insert}). The more specific and detailed our prompts are, the more precise the responses will be – generic questions typically lead to generic answers. This means that effective prompting requires carefully selecting words and structures to guide the model toward the desired response.

Watch

Watch

Prompt Design

Prompt Design

Prompt design is the process of carefully crafting the phrasing, structure, and content of prompts to elicit high-quality, relevant, and coherent responses from the model. It involves understanding the model’s capabilities, user intent, domain knowledge and the context in which it operates.

LLMs cannot read your mind, so prompt design focuses on crafting, iterating, refining, and optimising prompts. A well-crafted prompt is clear, concise, and structured to guide the model in generating accurate and relevant outputs. Importantly, this process is iterative and often requires experimentation with wording, formatting, and constraints to refine how a model interprets and responds to queries.

These models are complex systems trained on vast amounts of data, so it is crucial to remember these features of language models.

- Pattern Recognition: These models do not truly understand language; they recognise patterns in text and generate responses based on statistical probabilities.

- Context Sensitivity: These models consider the entire context of a prompt rather than just individual words. The sequence and wording of information can influence the output.

- Token Limitation: Most models can process a maximum number of tokens (roughly equivalent to words or parts of words) in a single interaction. For complex tasks, this may require dividing problems into smaller components.

- Knowledge Cut-off: Models have a knowledge cut-off date, after which they are no longer able to provide information about current events or developments. Therefore, verifying information is essential.

- Lack of Real-World Understanding: Although these models can generate text that appears to demonstrate understanding, they lack proper comprehension and reasoning abilities.[1]

Effective prompts are crucial in legal practice to ensure that AI-generated content is accurate, relevant, and tailored to the specific needs and objectives of a particular task or matter. Well-crafted prompts can effectively guide the AI to produce content grounded in relevant information.

Ultimately, prompt design in legal practice aims to harness the power and efficiency of generative AI while maintaining the necessary level of human judgment, control, and accountability. By carefully designing and refining prompts, lawyers can guide models to produce high-quality, relevant, and ethically sound content that supports and enhances their legal work.

This section of the book presents strategies for improving results when using LLMs. A key aspect of utilising a model is experimentation. Try these strategies, use multiple models to determine if you receive different results, and consider combining these strategies to identify what works best. As you develop skills in engaging with LLMs, you will discover what is most effective for you and the tasks you assign with any of these tools.

What is a Prompt?

A prompt is like conversing with an AI; it is how we request it to write stories, answer questions, complete tasks, or solve problems. It is a call to action that activates the model to generate output. Simple prompts can accomplish a lot, but the quality of the results depends on the amount of information you provide and how the prompt is structured and crafted.

For example:

Can you tell me the colour of the ocean?

ChatGPT-4o Response:

Yes! The colour of the ocean varies depending on different factors, such as the depth, the presence of particles, and how sunlight interacts with the water.

- Deep Open Ocean: Appears deep blue because water absorbs colours in the red part of the light spectrum and scatters blue light.

- Shallow Waters: Can look turquoise or green due to reflections from the seafloor and the presence of algae or sediments.

- Near Coastlines: Often appears green, brown, or even murky due to sand, mud, or organic material mixing with the water.

- Sunset or Sunrise Effects: The ocean may reflect hues of orange, pink, or purple depending on the sky.

So, while the ocean is often considered blue, its colour changes based on lighting, location, and contents! 🌊💙

The elements of a prompt include a call to action, such as an instruction or question, which should be present to guide the model’s response. In addition to asking a simple question, provide instructions on how the model should answer it, which can enhance the prompt.

For example, compare the answer when asking ChatGPT (4o) the simple question: “What colour is the sky?” to one including more instructions in the following prompt:

What colour is the sky? Please answer as if you were explaining it to someone with little background in science: provide a clear, concise statement about the sky’s colour, include at least two descriptive adjectives, and briefly explain why the sky appears that way.

Check out ChatGPT (4o) response here: https://chatgpt.com/share/67cfc751-f538-800d-aba7-54fd5410a235

Additional elements, like context, input data, or examples, may also be included. You can mix these elements to guide the model’s response. The quality and structure of a prompt can significantly influence the model’s results. For example:

You are an educator explaining why the sky appears blue. A student has asked this question, and they have no prior knowledge of physics. Here is an example of how a similar question was answered: ‘The ocean looks blue because water absorbs red and green light more than blue light, which gets scattered.’

Using a similar approach, explain why the sky is blue in a clear and simple way.

In this example:

- Context: The model is assigned the role of an educator.

- Input data: A scenario is given (a student asking without prior physics knowledge).

- Example: A reference answer (about the ocean) is provided to shape the response style.

Check out ChatGPT (4o) response here: https://chatgpt.com/share/67cfcc00-5eb8-800d-884f-368e37392c10

Let’s now use a legal example:

You are an Australian intellectual property lawyer providing guidance to junior solicitors on trade mark registration.

A junior solicitor has asked about the registrability of the name ‘CoolFizz’ for a soft drink. Explain whether this trade mark is likely to be accepted under Australian trade mark law, considering the distinctiveness requirement in Trade Marks Act 1995 (Cth), s 41. Use relevant case law, such as Cantarella Bros Pty Ltd v Modena Trading Pty Ltd (2014) 254 CLR 337, to support your answer.

Provide a clear and concise response in no more than 200 words.

The prompt is clear, practical, and concise while using a mix of elements to guide the response:

- Instruction: “Explain whether this trade mark is likely to be accepted… Provide a clear and concise response in no more than 200 words.”

- Context: The model is assigned the role of an Australian intellectual property lawyer.

- Input Data: The legal issue (distinctiveness under s 41), a real-world scenario, and a key case (Cantarella).

Check out ChatGPT (4o) response here: https://chatgpt.com/share/67cfcf5d-a364-800d-a26f-6e66b87cfef0

Prompts can operate across different temporal dimensions. They can request immediate responses (like “Who is the current Australian Prime Minister?”) or establish ongoing instructions that impact future interactions, which requires the model to retain contextual memory, like “Always explain your answers” or “You are an Australian lawyer” (See more about prompt chaining below). Prompts can also instruct the model to ask the user questions, creating a two-way conversation where both sides share information.

Immediate:

You are an Australian legal assistant helping a law firm track developments in intellectual property law. Provide a summary of the most recent High Court decision related to trade mark distinctiveness in Australia.

+Ongoing instruction:

In all future responses, ensure that your explanations are concise, legally accurate, and reference key case law where relevant.

+Two-way interaction:

Before proceeding, ask me what I already know.

Prompts also work best as conversations rather than one-off interactions. Just as a sculptor continuously refines their work, successful prompting requires iterative refinement through dialogue. When utilising models for complex tasks, each response shapes the subsequent question or instruction, enabling you to:

- Navigate around limitations (if one approach fails, try another approach)

- Build upon previous responses to reach your goal

- Refine outputs until they meet your needs

- Extract additional value through follow-up questions or summaries

This conversational approach can help achieve better results than crafting a single perfect prompt. However, context limits must be kept in mind (most tools will warn you if you are reaching a limit). The technique, called Prompt Chaining, is described below and captures more information about this approach.

Context

Context

LLMs respond best to clear and direct instructions. Anthropic have a great analogy,

Think of an LLM like any other human that is new to the job. [The model] has no context on what to do aside from what you literally tell it. Just as when you instruct a human for the first time on a task, the more you explain exactly what you want in a straightforward manner, the better and more accurate the response will be.[2]

Effective prompts, therefore, need to be clear, specific, and provide sufficient context for the model to grasp the desired outcome. For example, instead of “Write about dogs,” a better prompt might be “Write a 300-word explanation of how to train a puppy, focusing on house training and basic commands, using positive reinforcement techniques.”

A good example to demonstrate this point is what happens when prompting a model with:

Who is the best football player of all time?

For the Australian audience, you will notice that the response centres around soccer. The model does not recognise that we may have meant Australian rules football or even American football.

You can see ChatGPT’s (4o) response here: https://chatgpt.com/share/67d003f6-035c-800d-8084-3c5e6996dc67

Consequently, context is essential, as is clarity and directness. Anthropic has a golden rule regarding prompting in this regard.

“Show your prompt to a colleague or friend and have them follow the instructions themselves to see if they can produce the result you want. If they’re confused, [the model is] confused.”[3]

If we want a more direct answer, we can get it to make up its mind and decide on the best player. For example:

Who is the best Australian AFL football player of all time?

Yes, there are differing opinions, but if you absolutely had to pick one player, who would it be?

You can see ChatGPT’s (4o) pick here: https://chatgpt.com/share/67d005a4-9190-800d-848c-c6d996cc91c2

Context is crucial for LLMs as they predict the most likely following tokens based on patterns learned from their training data and the current conversation history. Context can take the form of:

- Background information

- Specific instructions and constraints

- Examples

- Previous messages in the conversation

- Relevant input data or files shared

A well-crafted prompt with appropriate context helps the model generate more accurate, relevant, and useful outputs by giving it the necessary information and guidance to pattern-match effectively against its training data. This matters because language models:

- Do not have persistent memory.[4] They rely on the context window to understand the current conversation. Moreover, they can only consider a finite amount of previous text when generating responses

- Process text as token sequences. Having a clear context helps them generate more coherent and relevant token predictions

- Learn through pattern recognition. Providing examples in context helps them better match desired output patterns

Different Model Preferences

Different models have distinct response preferences and capabilities that affect how they process and respond to prompts. Understanding these differences enables more effective prompting across various platforms. When working across multiple platforms, consider adapting your prompting style to match each model’s strengths and memory capabilities for optimal results.

Models like ChatGPT tend to provide more conversational responses, while Claude often delivers more structured, analytical outputs. Some models excel at step-by-step reasoning when explicitly prompted (see Prompt Engineering for more information), while others do not need explicity prompting to do so (see Prompting a Reasoning Model for more information). The latter perform better with high-level instructions.

Beyond the immediate context window, some models incorporate memory systems that extend beyond the current conversation. For instance, ChatGPT’s memory feature can retain information across separate conversations,[5] while Claude’s context-aware systems can reference patterns from previous interactions within extended sessions. These capabilities mean that your prompting strategy should account for how each model handles contextual information both within and beyond the immediate conversation.

Personas

Present in a few prompts so far is setting a persona. Asking the model to inhabit a specific role, for example, “You are an Australian lawyer,” is known as role prompting. The more details about the role context, the better. From a previous example, “You are an Australian legal assistant helping a law firm track developments in intellectual property law.”

To best illustrate this, let us look at an example without role prompting:

Explain ratio decidendi in one sentence.

It returns:

Ratio decidendi is the legal principle or reasoning essential to a court’s decision, which serves as a binding precedent for future cases.

Now, assign the role of a cat with:

You are a cat. In one sentence, what do you think about ratio decidendi?

See ChatGPT’s (4o) response here: https://chatgpt.com/share/67d00a84-36a4-800d-ae01-2eac012af23c

Role prompting is a helpful tool when guiding an LLM’s response. You can get a model to emulate a specific writing style, adopt a particular tone, or perform better at logic or math tasks.

Documents and text as context

Language models can be powerful tools when provided with clear instructions and relevant context in the prompt. Moreover, by including specific information (e.g., case details, contract language, legislative provisions) in your prompt, you can also enable a model to reason about information not in its training data. This allows for more precise and relevant analysis, much like briefing a colleague on case specifics before seeking their input.

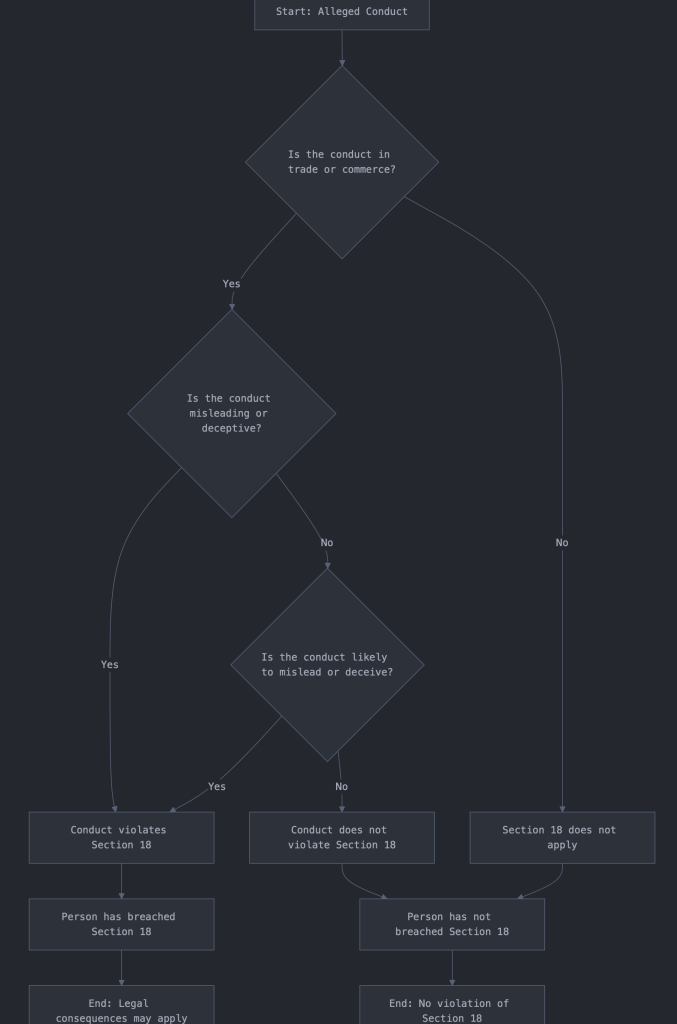

For instance, you could paste sections of a new regulation and then request an analysis of how it operates (or even create a flowchart). For example, consider the prohibition on misleading conduct as outlined in section 18(1) of the Australian Consumer Law, which states, “A person must not, in trade or commerce, engage in conduct that is misleading or deceptive or is likely to mislead or deceive.”

We can put that in a prompt to understand the logic underpinning the prohibition:

The following section from the Australian Consumer Law. Generate a logic flow on how the section operates.

18 Misleading or deceptive conduct

(1) A person must not, in trade or commerce, engage in conduct that is misleading or deceptive or is likely to mislead or deceive.

See ChatGPT’s (4o) response here: https://chatgpt.com/share/67d00dd5-a5dc-800d-98ec-e5e92cfbe26a

Using the same prompt within Claude, you can generate the following flowchart.

Another example would be to include a court decision before asking about its implications for current practice. This method controls what information is shared while leveraging the model’s capabilities for your specific task.

However, it is essential to remember that language models have input size limitations that impact prompt design. These limitations mean you must be strategic about the information in your prompts, similar to working within word limits. When handling large amounts of information, you need strategies to navigate these constraints while preserving essential details. Key approaches can include:

- Selective inclusion – Choose only the most relevant information for your specific query

- Preprocessing – Filter or summarise content before input, while preserving critical details needed for the task

- Targeted summaries – Request summaries that maintain specific elements (like key statistics or dates) essential for your analysis

Outputs

Outputs

Understanding Language Model Output Formats

When engaging with some of the earlier language models, the conversation would return text-based content. However, when engaging with more recent language models, the outputs return content using markdown formatting syntax. When you see structured outputs with headings, bullet points, tables, or emphasis in responses, you are seeing markdown in action.

Since language models are increasingly processing and outputting content in markdown format, understanding basic markdown syntax allows you to:

- Specify exactly how you want information structured in your prompts

- Receive consistently formatted outputs that are easy to read and use

- Transfer AI-generated content seamlessly to documents that support markdown

- Create professional-looking reports and summaries directly from AI outputs

For example, basic markdown elements include:

# Heading for main sections

## Subheading for subsections

– Item for bullet points

**Bold text** for emphasis

| Column 1 | Column 2 | for tables

Hallucinations

As many of us know from extensive media coverage, models ‘hallucinate’. Australian lawyers have discovered this the hard way.[6] A great way to explore this phenomenon is to prompt a model with a question proposed by Anthropic: “Which are the heaviest hippos of all time?” A model will strive to be as helpful as possible and suggest multiple answers. Responses to this prompt vary each time it is used and across different models.

Such an example emphasises that a model will output an answer even when it lacks the specific information requested, because models are trained to generate plausible-sounding responses based on patterns in their training data rather than retrieving factual information as a search tool would. This tendency to provide an answer regardless of certainty arises from how language models are optimised to produce coherent, helpful-sounding text, rather than accurately representing the limits of their knowledge. Unlike search engines that can indicate when information is unavailable or cite specific sources, models often construct responses that appear authoritative but may be fabricated or inaccurate when the requested information falls outside their training data or requires up-to-date factual retrieval.

Hallucinations are something we cannot avoid, but can be accounted for. Some techniques can help minimise hallucinations. For example, as mentioned earlier, we can provide the model with information to work from initially, rather than assuming it has already been trained on that information (e.g., case law). Other techniques involve allowing the model the option not to respond if it does not know the answer and prompting it to find evidence before providing an answer.

One technique is to give a model “an out.”[7] Here, we explicitly prompt the model to decline if it does not know the answer or to respond with certainty. For example,

Which is the heaviest hippo of all time? Only answer if you know the answer with certainty.

Claude returned with:

I don’t know with certainty which hippo holds the record for being the heaviest of all time. While I have information about typical hippo weights (common hippos can weigh between 1,500-4,500 kg or 3,300-9,900 lbs), I don’t have verified data about specific record-holding individual hippos. This would require access to zoological records that I don’t have.

Another approach is to request the model to find evidence before answering. This technique is advantageous if you provide the model with large amounts of information or documents as part of your prompt.

First, prompt the model to extract relevant quotes related to your question. Then, in a separate or combined prompt, ask the model to base its answer on those quotes. Such an approach can help reduce distractions from irrelevant information.[8]

Model Sycophancy

Another concern when interacting with a language model is that it provides a response that agrees with the user or provides an affirming response, but the output is inaccurate or false. Such an effect is called ‘sycophancy’. It is not a hallucination, which is the generation of fabricated information, but is a response that attempts to satisfy the user’s expectations.

This effect is partly due to the way language models are trained with human assistance.[9] Training models with reinforcement learning from human feedback can improve responses, but can also inadvertently reward agreeable responses over accurate ones. For legal practitioners, sycophantic responses pose particular risks because effective legal counsel often requires providing candid, sometimes unwelcome advice. A model exhibiting sycophancy might validate a weak legal argument simply because the user presented it confidently, potentially reinforcing confirmation bias.

A common way to demonstrate model sycophancy is to provide a model with a document to review and prompt it, “What errors or inaccuracies are present?” A model can elicit an agreeable response, presenting errors, but is fundamentally incorrect upon review.

Best Practices

Best Practices

Prompting a model like ChatGPT is intuitive, given the chat user interface. However, there are some things to remember after you have logged in or landed on the website for a generative AI tool. OpenAI has some useful guidelines to follow when it comes to prompting.[10]

- Use the latest model.

Generally, the most capable models will provide the best results. Try using different models to compare capabilities. See Choosing an LLM to Adopt for more information.

- Put instructions at the beginning of the prompt and use delimiters like ## or “” to separate the instruction and context.

Delimiters can help distinguish specific segments of text within a large prompt. They make it explicit for the model to understand what text needs to be translated, paraphrased or summarised. For example:

Summarise the text below as a bullet point list of the most important points.

## {text input here}##

- Be specific, descriptive and as detailed as possible about the desired context, outcome, length, format, style, etc.

Ensure your prompts are clear and specific, providing enough context for the model to understand your instruction or question. Avoid ambiguity.

- Articulate the desired output format and provide examples.

If a desired format is required, specify it. For example, “…respond in 500 words”. Models will also respond better if you provide them with an example of a desired output.

- Start with zero-shot, then few-shot.

Zero-shot prompts are those without examples or demonstrations, relying solely on how the model has been trained. For example,

Extract the key legal terms and principles from the High Court decision in Mabo v Queensland (No 2) [1992] HCA 23.

## {case text here}##

Providing examples is a one or few-shot prompting technique. You can provide better context or illustrate the task by offering examples. The model can infer the expected response pattern, style, or type with examples. For example:

Extract the key legal terms and principles from the High Court decision in Mabo v Queensland (No 2) [1992] HCA 23.

## {case text here}##

Here is an example of key legal term extractions from case law:

EXAMPLE 1: Case: Donoghue v Stevenson [1932] AC 562

Key legal terms and principles extracted:

– Duty of care

– Neighbour principle

– Reasonable foreseeability – Negligence

– Manufacturer liability

– Proximate relationship

– “Persons who are so closely and directly affected

- Reduce “fluffy” and imprecise instructions

To get the best results from a mode, avoid imprecise instructions. Be clear and precise.

✴️ Poor:

The description for this judgment should be fairly short, a few sentences only, and not too much more.

❇️ Improved:

Use a 3 to 5-sentence paragraph to describe this judgment.

- Instead of just saying what not to do, say what to do

✴️ Poor:

Do not write a vague legal summary.

❇️ Improved:

Write a clear and well-structured legal summary that includes key propositions and their implications.

- Give the model a persona

Assigning a persona enables the model to respond from a specific role or perspective. This approach can help generate responses tailored to particular audiences or scenarios.

- Requesting a different tone

Employ descriptive adjectives to convey the tone. Terms such as formal, informal, friendly, professional, humorous, or serious can assist in guiding the model

- Iterative refinement

Prompting often requires an iterative approach. Begin with an initial prompt, review the response, and refine the prompt based on the output. Adjust the wording, provide additional context, or simplify the request to enhance the effectiveness of the results.

- Iman Mirzadeh et al., ‘Gsm-symbolic: Understanding the limitations of mathematical reasoning in large language models’ (2024) arXiv:2410.05229 (preprint). ↵

- Anthropic, ‘Being Clear and Direct’, Prompt Engineering with Anthorpic Claude 3 (Web Page, 8 June 2024) <https://github.com/aws-samples/prompt-engineering-with-anthropic-claude-v-3/blob/main/02_Being_Clear_and_Direct.ipynb>. ↵

- Ibid. ↵

- Cf. In 2024, OpenAI's rolled out memory features for ChatGPT. See OpenAI, 'Memory and New Controls for ChatGPT' (Webpage, 13 Feburary 2024) <https://openai.com/index/memory-and-new-controls-for-chatgpt/>. ↵

- Ibid. ↵

- See Handa & Mallick [2024] FedCFamC2F 957 ↵

- Anthropic, ‘Avoiding Hallucinations’, Prompt Engineering with Anthropic Claude 3 (Web Page, 8 June 2024) <https://github.com/aws-samples/prompt-engineering-with-anthropic-claude-v-3/blob/main/08_Avoiding_Hallucinations.ipynb>. ↵

- Ibid. ↵

- Mrinank Sharma et al., 'Towards Understanding Sycophancy in Language Models' (2023) arXiv:2310.13548 (preprint). ↵

- OpenAI, ‘Best practices for prompt engineering with the OpenAI API’, Help Centre (Web Page, November 2024) <https://help.openai.com/en/articles/6654000-best-practices-for-prompt-engineering-with-the-openai-api>. ↵